The Privacy Assessment Paradox: How to Evaluate What You Don't Yet Understand

The Bottom Line Up Front: You don't need to be a legal expert to lead effective privacy initiatives—you need to be a systems thinker who can ask the right questions and recognize patterns of privacy risk. The most dangerous privacy violations often happen in the gaps between what's legally required and what's ethically necessary, and identifying these gaps requires a different kind of assessment than traditional compliance audits provide.

The Expert Trap: Why Legal Knowledge Isn't Enough

Picture this scene: A company decides it needs to "get serious about privacy." The first move? Hire a lawyer specializing in privacy law. Six months later, they have pristine privacy policies, detailed data processing agreements, and comprehensive compliance documentation. The legal expert declares them ready for any regulatory inspection.

Then the breach happens. Not a technical breach—those security systems held fine. Not a legal breach—every regulation was followed to the letter. It was a trust breach. The company used customer data in ways that were legally permissible but ethically questionable, and when customers found out, the damage to reputation and relationships was catastrophic.

This scenario plays out repeatedly because we've fallen into what I call the "expert trap": the belief that privacy is primarily a legal domain requiring legal expertise. As danah boyd argues in It's Complicated, privacy is less about controlling information than about controlling social situations. Legal frameworks can't capture the full complexity of social expectations and contextual norms that determine whether people feel their privacy has been respected or violated.

The paradox is this: The people best positioned to identify real privacy risks often aren't privacy lawyers. They're the product managers who understand how features might be misused. They're the data scientists who see patterns that could reveal more than intended. They're the customer service representatives who hear what actually worries people about data collection.

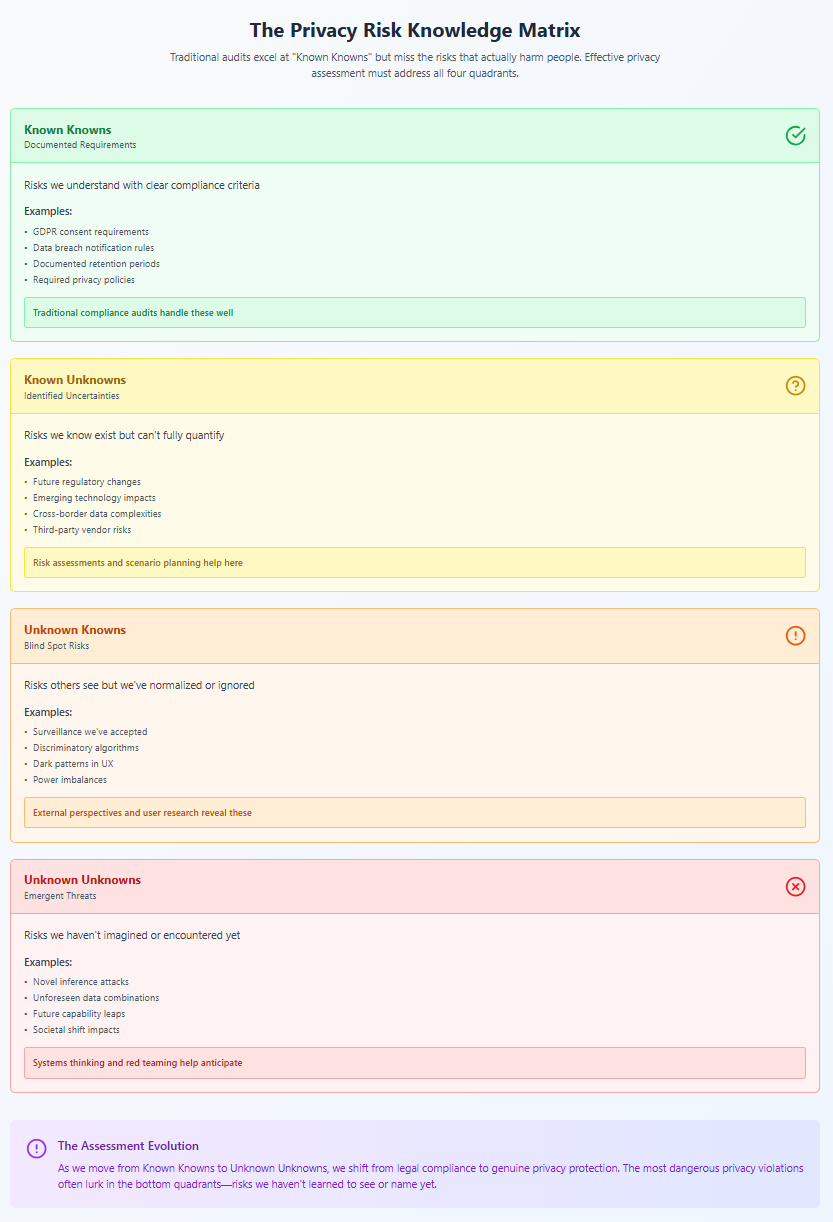

The Assessment Challenge: Known Unknowns and Unknown Unknowns

Donald Rumsfeld's famous framework of "known knowns, known unknowns, and unknown unknowns" applies perfectly to privacy assessment. Traditional privacy audits excel at addressing known knowns—documented requirements with clear compliance criteria. They're reasonably good at known unknowns—identified risks that need evaluation. But they're terrible at unknown unknowns—the privacy risks we haven't yet learned to recognize.

As Frank Pasquale argues in The Black Box Society, more and more consequential decisions about us are made by opaque algorithms, using data we may not know was collected, in ways we cannot easily contest. How do you assess risks in systems you can't see, using frameworks that haven't been invented, for harms that haven't yet been named?

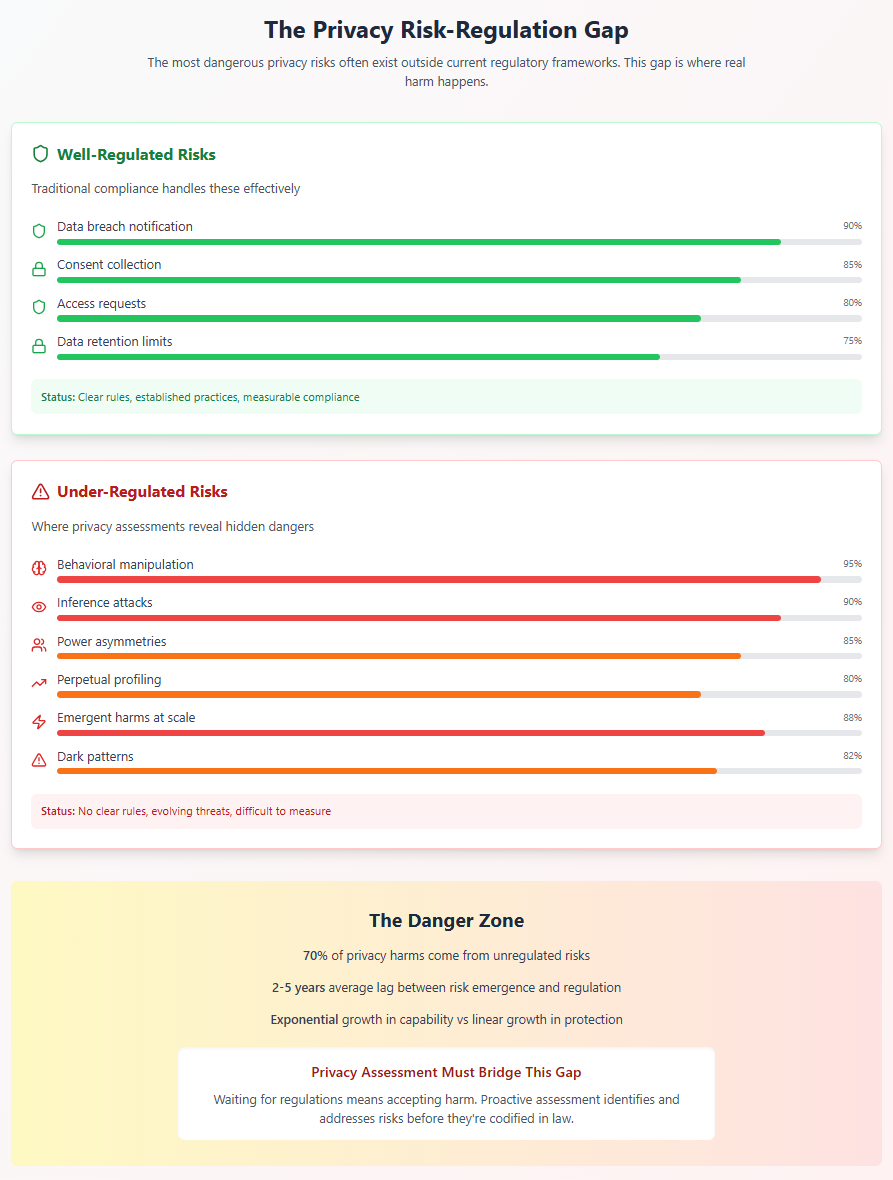

The Regulatory Lag Problem

Consider facial recognition technology. By the time regulations caught up to its privacy implications, it had already been deployed in countless contexts: retail stores tracking customers, employers monitoring workers, law enforcement building databases of citizens. The privacy assessments conducted during early deployments couldn't evaluate risks that weren't yet understood against frameworks that didn't yet exist.

This isn't unusual—it's the norm. As Cathy O'Neil documents in Weapons of Math Destruction, "The math-powered applications powering the data economy were based on choices made by fallible human beings. Some of these choices were no doubt made with the best intentions. Nevertheless, many of these models encoded human prejudice, misunderstanding, and bias into the software systems that increasingly managed our lives."

Traditional privacy assessments ask: "Are we compliant with current regulations?" But regulations are always fighting the last war. By the time a practice is regulated, the damage is often done, and new practices have emerged that create similar risks in different ways.

The Context Collapse Problem

Privacy risks often emerge not from individual data points but from their combination and context. As Helen Nissenbaum explains in Privacy in Context, privacy is violated when information flows in ways that breach the norms of the context in which it was shared. But how do you assess whether future uses of data will violate norms that might shift over time?

Consider location data. A privacy assessment might confirm that users consented to location tracking for navigation purposes. But what happens when that same data is later used to:

- Infer health conditions from visits to medical facilities

- Determine insurance rates based on driving patterns

- Target advertising based on income levels inferred from shopping locations

- Share with law enforcement without user knowledge

Each of these uses might be legally permissible under broad consent language, yet each illustrates the problem Woodrow Hartzog examines in his book Privacy's Blueprint: the design of technologies shapes how well privacy is protected, but privacy law has largely ignored design.
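A minimal Python sketch can make this secondary-use risk concrete. It assumes location data arrives as `(user, latitude, longitude)` pings and that the assessor keeps a list of sensitive places; `SENSITIVE_PLACES`, its coordinates, and the 100-meter radius are all hypothetical. The function flags users repeatedly seen near a sensitive place, which is how a dataset consented to for navigation quietly becomes a health or affiliation dataset.

```python
import math
from collections import Counter

# Hypothetical sensitive places; names and coordinates are invented for illustration.
SENSITIVE_PLACES = {
    "oncology_clinic": (40.7410, -73.9897),
    "union_hall": (40.7306, -73.9866),
}

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points."""
    r = 6_371_000  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def sensitive_inferences(pings, radius_m=100, min_visits=3):
    """Map (user, place) to ping count for users seen near a sensitive place at least min_visits times."""
    counts = Counter()
    for user, lat, lon in pings:
        for place, (plat, plon) in SENSITIVE_PLACES.items():
            if haversine_m(lat, lon, plat, plon) <= radius_m:
                counts[(user, place)] += 1
    return {key: n for key, n in counts.items() if n >= min_visits}

# Four pings from u1 beside the clinic, one from u2 elsewhere.
pings = [("u1", 40.7411, -73.9896)] * 4 + [("u2", 40.7500, -73.9800)]
print(sensitive_inferences(pings))  # {('u1', 'oncology_clinic'): 4}
```

Counting pings is a crude stand-in for real visit detection, which would cluster dwell time, but the point survives the simplification: nothing in the navigation consent mentioned clinics.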

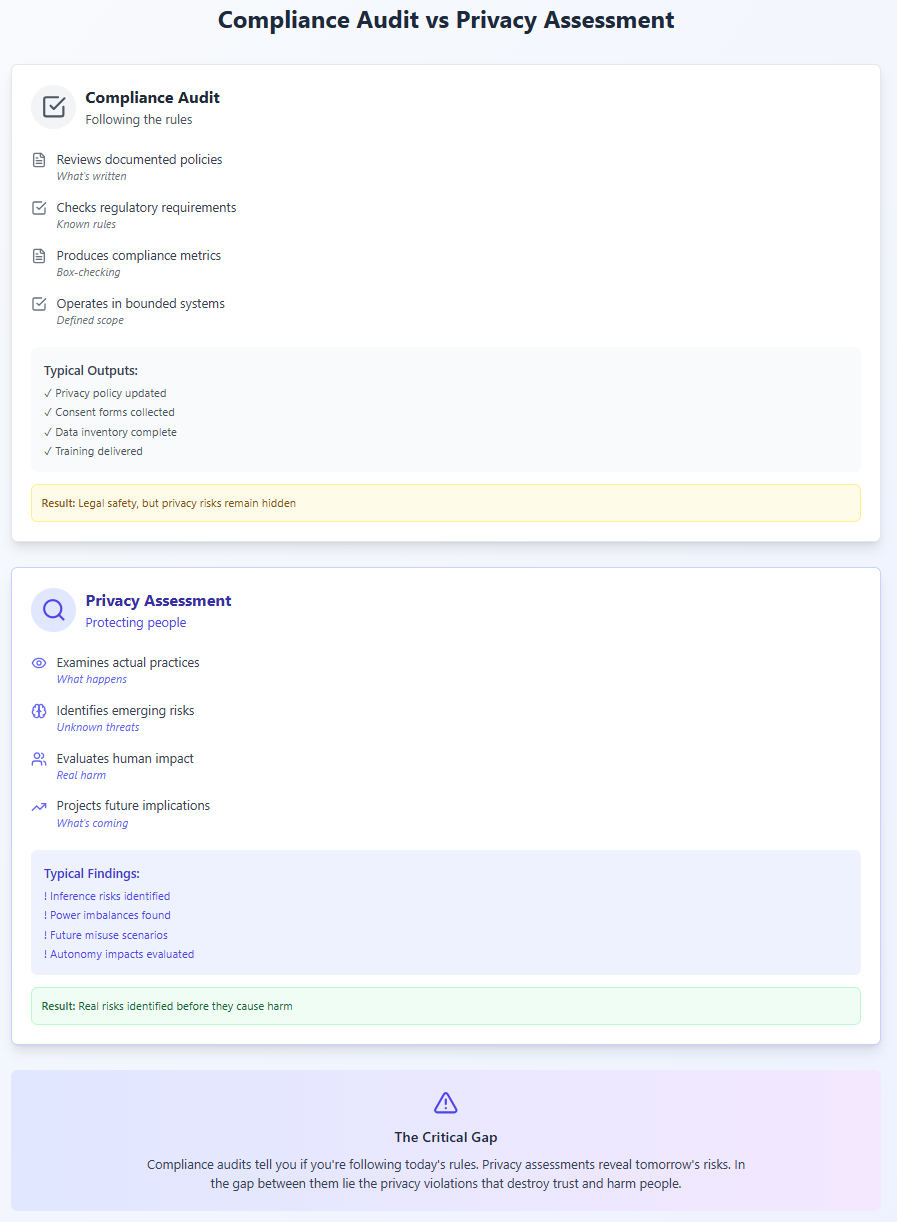

What's Different: Compliance Audit vs. Privacy Assessment

Understanding the distinction between compliance audits and genuine privacy assessments is crucial for building programs that actually protect privacy rather than just documenting adherence to rules.

Compliance Audits: Checking Boxes in a Bounded System

Compliance audits operate in what systems theorists call a "bounded system"—one with defined inputs, outputs, and rules. They ask questions like:

- Do we have privacy policies in place?

- Are we collecting consent where required?

- Do we respond to data subject requests within mandated timeframes?

- Are our data retention periods documented and followed?

- Have we conducted required privacy impact assessments?

These are important questions, but they operate within narrow parameters. As Julie Cohen observes in Configuring the Networked Self, "Privacy regulation has evolved largely by accretion, with new rules layered atop existing ones in response to particular scandals or concerns." Compliance audits check these layers of rules without examining whether the overall system respects privacy in any meaningful way.

Privacy Assessments: Evaluating Unbounded Systems

Genuine privacy assessments operate in unbounded systems—ones where the rules themselves might be inadequate, where context matters more than categories, and where future uses can't be fully predicted. They ask fundamentally different questions:

- What power dynamics does our data collection create or reinforce?

- How might our data be used in ways we haven't anticipated?

- What are the cumulative effects of our data practices on human autonomy?

- Where might our practices violate social norms even if they follow legal rules?

- How do our systems shape behavior in ways that might undermine privacy?

As Zeynep Tufekci writes in Twitter and Tear Gas, "The capacity to surveil, analyze, and use information about people has exploded, while the legal and ethical frameworks for protecting people lag behind." Privacy assessments must evaluate risks in this gap between capability and protection.

The Measurement Problem

Compliance audits produce comforting metrics—100% of policies updated, 95% of employees trained, zero regulatory violations. But what metrics capture whether privacy is actually protected?

Consider the core mechanism of what Shoshana Zuboff calls surveillance capitalism, which, as she writes in The Age of Surveillance Capitalism, "unilaterally claims human experience as free raw material for translation into behavioral data." A compliance audit might confirm that consent was obtained for this extraction. A privacy assessment would ask whether meaningful consent is even possible when people can't understand how their data will be used or what patterns it might reveal.

Identifying Invisible Risks: What Regulations Haven't Caught Up To

The most dangerous privacy risks are often those that haven't yet been named or regulated. Identifying them requires a kind of pattern recognition: the ability to notice what science fiction author William Gibson meant when he observed that the future is already here, just not evenly distributed.

The Inference Problem

Modern privacy risks often come not from data explicitly collected but from inferences drawn from that data. As researchers have demonstrated, seemingly innocuous data can reveal highly sensitive information:

- Shopping patterns can predict pregnancy before the person has shared the news

- Social media likes can be used to infer sexual orientation with high accuracy

- Typing patterns can identify individuals across anonymous platforms

- Movement data can reveal political affiliations and social connections

Current regulations focus on "personal data" as explicitly collected information. But as Viktor Mayer-Schönberger and Kenneth Cukier note in Big Data, "In the big-data age, all data will be regarded as personal data." The question isn't what data you collect but what can be inferred from it.

The Aggregation Problem

Individual data points might be harmless, but their aggregation creates new risks. As Bruce Schneier explains in Data and Goliath, "The accumulated data can reveal incredibly sensitive things about us, even things we don't know about ourselves."

Consider employee wellness programs. Individually, tracking steps, heart rate, sleep patterns, and stress levels might seem benign. But aggregated, this data could reveal:

- Mental health conditions

- Pregnancy before announcement

- Substance use patterns

- Family problems affecting sleep

- Early signs of chronic conditions

No single regulation addresses the privacy implications of such aggregation, but the risks to employee autonomy and dignity are profound.
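One way to test the aggregation problem is to measure how quickly individually harmless attributes single people out. The sketch below, assuming a hypothetical wellness-program export with coarse fields (department, age band, sleep band), computes k-anonymity: the size of the smallest group of records sharing the same values. Each field alone leaves people in a crowd; combined, they can isolate a single employee.

```python
from collections import Counter

def group_sizes(records, fields):
    """Count how many records share each combination of values in `fields`."""
    return Counter(tuple(r[f] for f in fields) for r in records)

def smallest_group(records, fields):
    """k-anonymity over `fields`: size of the smallest group of identical value combinations."""
    return min(group_sizes(records, fields).values())

def singled_out(records, fields, k=2):
    """Records whose combination of `fields` is shared by fewer than k records."""
    sizes = group_sizes(records, fields)
    return [r for r in records if sizes[tuple(r[f] for f in fields)] < k]

# Hypothetical wellness-program export; each field is coarse on its own.
employees = [
    {"id": 1, "dept": "sales", "age_band": "30-39", "sleep_band": "<6h"},
    {"id": 2, "dept": "sales", "age_band": "30-39", "sleep_band": "6-8h"},
    {"id": 3, "dept": "sales", "age_band": "40-49", "sleep_band": "6-8h"},
    {"id": 4, "dept": "legal", "age_band": "30-39", "sleep_band": "6-8h"},
    {"id": 5, "dept": "legal", "age_band": "40-49", "sleep_band": "<6h"},
    {"id": 6, "dept": "legal", "age_band": "40-49", "sleep_band": "6-8h"},
]

for fields in (["dept"], ["age_band"], ["sleep_band"], ["dept", "age_band", "sleep_band"]):
    print(fields, smallest_group(employees, fields))
# ['dept'] 3
# ['age_band'] 3
# ['sleep_band'] 2
# ['dept', 'age_band', 'sleep_band'] 1
```

With all three fields combined, every record is unique, so anyone who knows a colleague's department and rough age can read off that colleague's sleep data.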

The Perpetuity Problem

Digital data doesn't fade like human memory. As Viktor Mayer-Schönberger argues in Delete: The Virtue of Forgetting in the Digital Age, "The default of remembering will reshape fundamental aspects of human society and identity." But our privacy frameworks assume data has natural lifecycles.

Consider hiring algorithms trained on historical data. They can perpetuate past biases indefinitely, denying opportunities based on patterns that may no longer be relevant. As Cathy O'Neil documents, these "weapons of math destruction" create what she calls "pernicious feedback loops," in which past disadvantage becomes future exclusion.

The Manipulation Problem

Perhaps the least regulated but most dangerous privacy risk is the use of personal data for behavioral manipulation. As Shoshana Zuboff documents, surveillance capitalism's "products and services are not the objects of value exchange but rather the means to an entirely new and lucrative marketplace in behavioral prediction."

This goes beyond targeted advertising to what researchers call "dark patterns"—design choices that manipulate users into behaviors they wouldn't freely choose. Privacy assessments must evaluate not just what data is collected but how it's used to shape behavior.

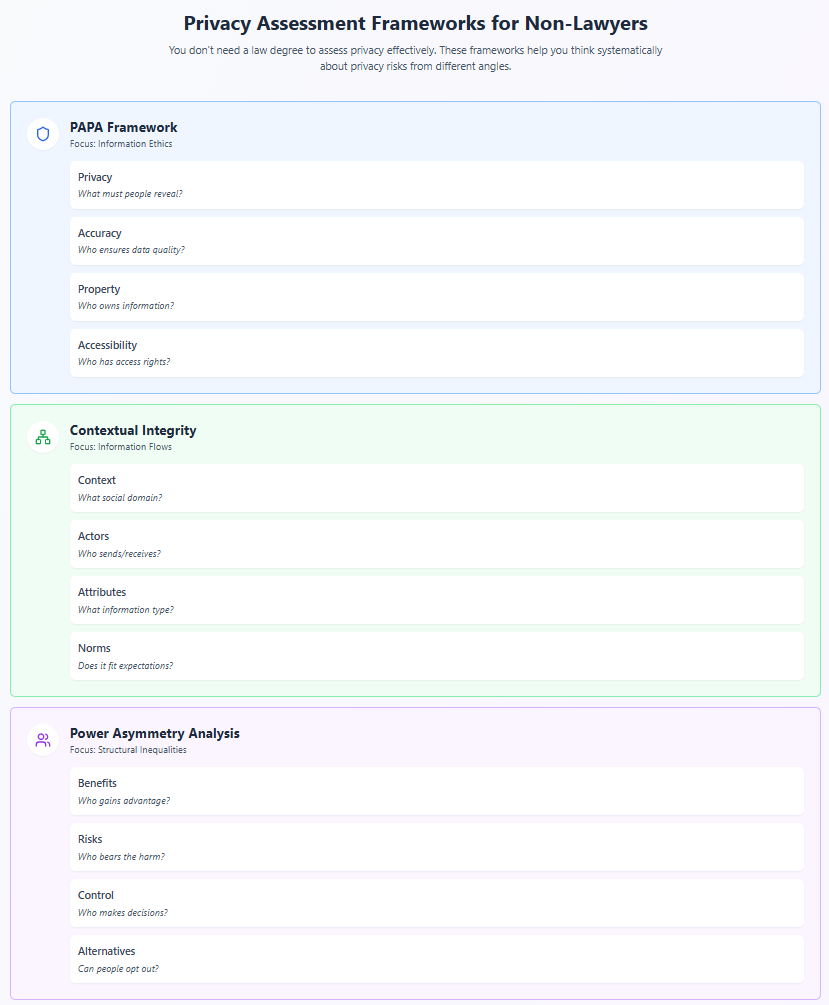

Frameworks for Non-Lawyers: Systems Thinking About Privacy

You don't need a law degree to conduct effective privacy assessments. You need frameworks that help you think systematically about privacy risks. Here are approaches that work for non-lawyers while capturing complexities that purely legal frameworks miss.

The PAPA Framework: Privacy, Accuracy, Property, Accessibility

Developed by Richard Mason in 1986 but still relevant today, PAPA provides a simple structure for thinking about information ethics:

- Privacy: What information about oneself should a person be required to reveal to others?

- Accuracy: Who is responsible for the authenticity and fidelity of information?

- Property: Who owns information, and how should ownership be determined?

- Accessibility: What information does a person or organization have a right to obtain?

This framework helps identify issues that might not appear in compliance checklists but significantly impact privacy.

The Contextual Integrity Heuristic

Based on Helen Nissenbaum's work, this framework evaluates privacy through information flows:

- Identify the context: What social domain does this information flow occur within?

- Identify actors: Who are the senders, receivers, and subjects of information?

- Identify attributes: What types of information are involved?

- Identify transmission principles: Under what terms does information flow?

- Evaluate appropriateness: Do these flows match contextual norms?

This helps identify when technically permitted uses violate social expectations.
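The five steps above map naturally onto a small data structure. This Python sketch records each flow with the heuristic's parameters and checks it against a table of norms; the `NORMS` entries and role names are hypothetical, and deciding what belongs in that table remains a human judgment the code cannot make.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    """One information flow described by the heuristic's parameters."""
    context: str    # social domain, e.g. "healthcare"
    sender: str     # role sending the information
    recipient: str  # role receiving it
    subject: str    # role of the person the information is about
    attribute: str  # type of information
    principle: str  # terms of transmission, e.g. "confidential"

# Hypothetical norms: for each context, the (sender, recipient, subject, attribute,
# principle) combinations the assessment team judged appropriate.
NORMS = {
    "healthcare": {
        ("patient", "physician", "patient", "diagnosis", "confidential"),
        ("physician", "specialist", "patient", "diagnosis", "referral"),
    },
}

def violates_norms(flow: Flow) -> bool:
    """True when no recorded norm for the flow's context matches it exactly."""
    allowed = NORMS.get(flow.context, set())
    key = (flow.sender, flow.recipient, flow.subject, flow.attribute, flow.principle)
    return key not in allowed

referral = Flow("healthcare", "physician", "specialist", "patient", "diagnosis", "referral")
ad_sale = Flow("healthcare", "physician", "advertiser", "patient", "diagnosis", "sale")
print(violates_norms(referral), violates_norms(ad_sale))  # False True
```

Contexts with no recorded norms fail closed: every flow in them is flagged until someone articulates what is appropriate there.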

The Power Asymmetry Analysis

This framework, inspired by critical data studies, examines how data practices affect power relationships:

- Who benefits? Who gains advantage from this data collection/use?

- Who bears risks? Who faces potential harms?

- Who has control? Who can make decisions about the data?

- Who has alternatives? Can people opt out without significant loss?

- Who has recourse? What happens when things go wrong?

This reveals privacy risks that emerge from structural inequalities rather than technical vulnerabilities.

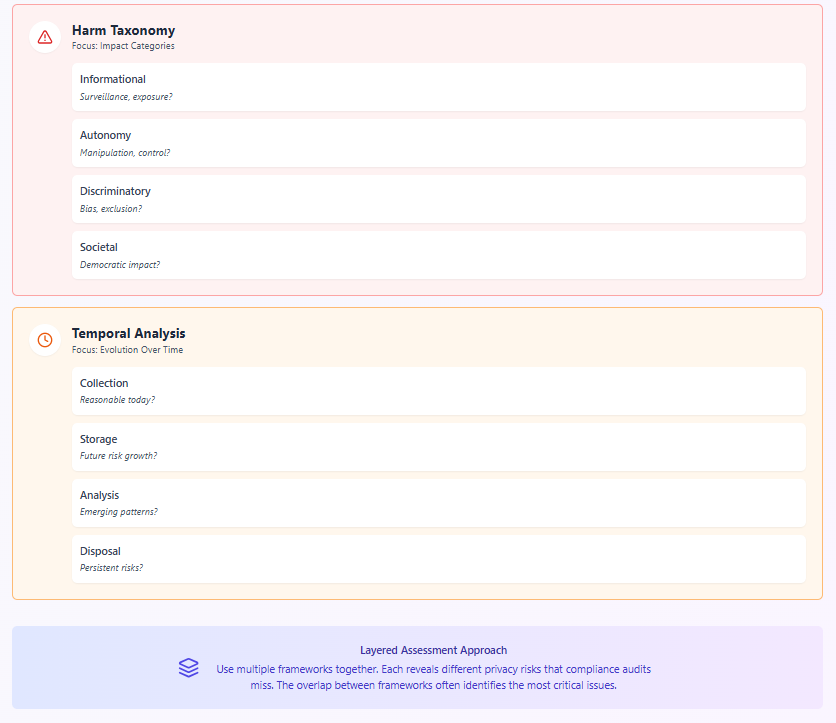

The Harm Taxonomy

Drawing in part on Daniel Solove's taxonomy of privacy, this framework categorizes potential privacy harms:

- Informational Harms: Surveillance, interrogation, disclosure, exposure

- Autonomy Harms: Decisional interference, behavioral manipulation

- Discriminatory Harms: Bias, exclusion, differential treatment

- Relationship Harms: Trust breach, power imbalance, dignity violation

- Societal Harms: Chilling effects, democratic interference

This helps identify impacts that might not violate specific regulations but cause real damage.

The Temporal Analysis Framework

This framework examines how privacy risks evolve over time:

- Collection phase: What seems reasonable today?

- Storage phase: How might retention create future risks?

- Analysis phase: What patterns might emerge later?

- Use phase: How might purposes drift over time?

- Disposal phase: What risks persist after deletion?

This helps identify risks that emerge from data persistence and evolving capabilities.
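The disposal-phase question can be checked mechanically once an inventory records every copy of a dataset. This sketch assumes a hypothetical inventory in which each copy notes how many days it survives after a user asks for deletion; the system names and retention figures are invented. Anything with a nonzero value is residual exposure that the deletion promise does not cover.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Copy:
    location: str           # system holding a copy of the data
    kind: str               # hypothetical labels: "primary", "backup", "derived"
    days_after_delete: int  # how long this copy survives a user's deletion request

def residual_exposure(copies, deleted_on):
    """Copies that outlive a deletion request, with the date each is finally gone, earliest first."""
    survivors = [
        (c.location, c.kind, deleted_on + timedelta(days=c.days_after_delete))
        for c in copies
        if c.days_after_delete > 0
    ]
    return sorted(survivors, key=lambda item: item[2])

inventory = [
    Copy("orders_db", "primary", 0),
    Copy("nightly_backup", "backup", 35),
    Copy("churn_model_features", "derived", 365),
]
for location, kind, gone_on in residual_exposure(inventory, date(2024, 1, 1)):
    print(location, kind, gone_on)
# nightly_backup backup 2024-02-05
# churn_model_features derived 2024-12-31
```

Derived data such as model features is the case most often missed: deleting the raw record does not remove what was learned from it.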

Building Your Assessment Toolkit: Practical Approaches

Moving from frameworks to practice requires building an assessment toolkit that combines systematic analysis with practical investigation. Here's how to construct effective privacy assessments without getting lost in legal minutiae.

The Question Protocol: What to Ask

Good privacy assessment starts with asking the right questions. Here's a structured protocol:

Data Mapping Questions:

- What data do we actually collect? (Not what policies say, but what systems do)

- Where does it come from? (Direct collection, third parties, inference)

- Where does it go? (Internal uses, sharing, sales)

- How long does it persist? (Retention, backups, derived data)

- Who can access it? (Employees, partners, authorities)

Purpose Analysis Questions:

- Why do we need this specific data?

- Could we achieve our goals with less data?

- How might purposes expand over time?

- What prevents scope creep?

- When will we no longer need it?
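The first two purpose-analysis questions lend themselves to a simple cross-check: list what the system actually collects, list each declared purpose with the fields it genuinely needs, and see what is left over. This is a minimal sketch; the field and purpose names are hypothetical.

```python
# Hypothetical inventory: fields the system actually collects.
COLLECTED = {"email", "birth_date", "precise_location", "contacts", "device_id"}

# Hypothetical declared purposes, each listing the fields it genuinely requires.
PURPOSES = {
    "account_login": {"email"},
    "age_verification": {"birth_date"},
    "fraud_detection": {"device_id", "email"},
}

def unjustified_fields(collected, purposes):
    """Fields collected without any declared purpose that needs them, sorted by name."""
    needed = set().union(*purposes.values())
    return sorted(collected - needed)

print(unjustified_fields(COLLECTED, PURPOSES))  # ['contacts', 'precise_location']
```

A field that survives the check is not automatically justified. Age verification, for instance, may need only an over-18 flag rather than a full birth date; that "less data" judgment is one the check cannot make on its own.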

Risk Identification Questions:

- What's the worst-case scenario for misuse?

- What would happen if this data became public?

- How might this data be combined with other data?

- What inferences could be drawn?

- Who might be harmed and how?

Control Evaluation Questions:

- Can people understand what we're doing?

- Do they have meaningful choices?

- Can they correct errors?

- Can they truly delete their data?

- What happens when they try to leave?

The Investigation Method: How to Look

Effective privacy assessment requires looking beyond documentation to actual practices:

Technical Investigation:

- Review actual data flows, not just documented ones

- Examine database schemas and API calls

- Analyze audit logs for access patterns

- Test deletion claims with actual attempts

- Monitor data sharing through network analysis
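Reviewing actual data flows rather than documented ones reduces, at its simplest, to a set comparison. This sketch assumes observed flows have already been extracted from egress logs or a network capture as `(source_system, destination)` pairs; the extraction step is not shown, and all system names and hostnames are hypothetical.

```python
def compare_flows(documented, observed):
    """Compare documented (source_system, destination) flows with observed ones.

    Returns flows that happen but are undocumented, and documented flows
    that were never observed during the review window.
    """
    documented, observed = set(documented), set(observed)
    return {
        "undocumented": sorted(observed - documented),
        "unobserved": sorted(documented - observed),
    }

documented = [("checkout", "payments.example.com"), ("app", "analytics.example.com")]
# Hypothetical flows extracted from egress logs during the review window.
observed = [
    ("checkout", "payments.example.com"),
    ("app", "analytics.example.com"),
    ("app", "ads-sdk.example.net"),
]
print(compare_flows(documented, observed))
# {'undocumented': [('app', 'ads-sdk.example.net')], 'unobserved': []}
```

An "unobserved" flow may simply mean the review window was too short, so treat it as a prompt to revisit the documentation rather than proof the flow stopped.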

Behavioral Investigation:

- Interview employees about actual practices

- Observe decision-making processes

- Review customer complaints and concerns

- Analyze support tickets for privacy issues

- Monitor social media for user reactions

Scenario Testing:

- Walk through user journeys end-to-end

- Test edge cases and unusual situations

- Simulate data breaches and responses

- Evaluate cross-functional data uses

- Project future use cases

The Red Team Approach: Thinking Like an Adversary

Some of the best privacy assessments adopt a "red team" mentality—actively trying to find ways the system could be abused:

Adversarial Scenarios:

- How would a malicious insider exploit our data?

- What would a data broker do with our information?

- How might law enforcement interpret our data?

- What would competitors learn from our patterns?

- How could activists expose our practices?

Unintended Use Cases:

- How might features be repurposed?

- What happens when systems interact?

- How do edge cases reveal sensitive data?

- What patterns emerge at scale?

- Where do assumptions break down?

This approach often reveals risks that compliance-focused assessments miss entirely.
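The data-broker scenario can be rehearsed directly with a linkage test: join the dataset you consider de-identified against an outside dataset on shared quasi-identifiers and count unique matches. Both datasets below, including the names and ZIP codes, are invented for illustration; in a real exercise the outside dataset would be whatever an adversary could plausibly obtain.

```python
def link_records(released, public, keys):
    """Attach a name to each released row whose `keys` values match exactly one public row."""
    index = {}
    for row in public:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)
    matches = []
    for row in released:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # ambiguous matches are skipped, not guessed
            matches.append((candidates[0]["name"], row))
    return matches

# "De-identified" release: no names, but quasi-identifiers remain.
released = [
    {"zip": "12345", "birth_year": 1961, "sex": "F", "diagnosis": "hypertension"},
    {"zip": "12346", "birth_year": 1980, "sex": "M", "diagnosis": "asthma"},
]
# Hypothetical outside dataset an adversary might hold.
public = [
    {"name": "A. Smith", "zip": "12345", "birth_year": 1961, "sex": "F"},
    {"name": "B. Jones", "zip": "12346", "birth_year": 1980, "sex": "M"},
    {"name": "C. Lee", "zip": "12346", "birth_year": 1980, "sex": "M"},
]
for name, row in link_records(released, public, ["zip", "birth_year", "sex"]):
    print(name, row["diagnosis"])  # A. Smith hypertension
```

The second record survives only because two public rows share its values; one more overlapping field could be enough to break the tie.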

Case Studies: Assessments That Found What Audits Missed

Real examples illustrate how systematic privacy assessment reveals risks that traditional audits overlook.

Case 1: The Fitness App That Revealed Military Bases

In 2018, Strava's global heatmap inadvertently revealed the locations and layouts of military bases worldwide. The company had conducted standard privacy audits—users consented to data collection, the data was anonymized, sharing was optional. All boxes checked.

What a proper privacy assessment would have revealed:

- Aggregated "anonymous" data can reveal highly sensitive patterns

- Default settings matter more than optional controls

- Global visualization creates different risks than individual tracking

- Some user populations face unique vulnerabilities

The lesson: Privacy assessment must consider emergent properties of data at scale, not just individual compliance.

Case 2: The Credit Card That Predicted Divorce

Credit card companies have reportedly been able to predict divorce from purchasing patterns, by some accounts up to two years before it occurs. Traditional privacy audits showed compliance: customers had agreed to purchase tracking for fraud prevention.

What privacy assessment would have revealed:

- Behavioral patterns reveal life circumstances

- Predictive models create new categories of sensitive data

- Such predictions can be used to disadvantage people financially at moments of life disruption

- Consent to fraud monitoring doesn't imply consent to life prediction

The lesson: Privacy assessment must evaluate inferential capabilities, not just explicit data use.

Case 3: The School Surveillance That Never Stopped

Educational technology companies provided schools with laptop monitoring software for "student safety." Privacy audits confirmed parental consent and documented educational purposes. But investigation revealed screenshots taken in bedrooms, keystroke logging of private communications, and surveillance continuing after graduation.

What privacy assessment would have revealed:

- Power imbalances make meaningful consent impossible

- Technical capabilities enable scope creep

- Surveillance becomes normalized in educational contexts

- Children's privacy needs special protection

The lesson: Privacy assessment must consider power dynamics and special populations, not just formal consent.

The Path Forward: Building Assessment Competence

Developing privacy assessment competence doesn't require years of legal study. It requires cultivating what we might call "privacy sense"—the ability to recognize patterns, ask good questions, and think systematically about data's impact on human autonomy.

Developing Privacy Intuition

Like any complex skill, privacy assessment improves with practice. Start by:

Reading Privacy Failures: Study documented privacy violations to understand patterns. The Privacy Rights Clearinghouse maintains extensive databases. Look not just at what went wrong but what assessment might have prevented it.

Following Privacy Researchers: People like Zeynep Tufekci, Cathy O'Neil, and Julia Angwin regularly expose privacy risks before they become scandals. Their work teaches you to see around corners.

Practicing Scenario Analysis: Take any new technology or service and ask: How could this go wrong? What would malicious use look like? What happens at scale? Who might be harmed?

Building Technical Literacy: You don't need to code, but understanding the basics of databases, APIs, and machine learning helps you ask better questions and evaluate the answers.

Creating Assessment Infrastructure

Effective privacy assessment needs organizational support:

Cross-Functional Teams: Include technologists, ethicists, user advocates, and business representatives. Different perspectives reveal different risks.

Regular Rhythm: Privacy assessment isn't a one-time event but an ongoing practice. Build regular reviews into product development and organizational planning.

External Input: Users, researchers, and critics often see risks you miss. Create channels for external privacy concerns and take them seriously.

Learning Loops: When privacy issues emerge, conduct retrospectives. What did we miss? Why? How do we improve our assessment process?

Moving Beyond Compliance Theater

The goal isn't perfect prediction of all privacy risks—that's impossible in complex, evolving systems. The goal is building organizational capabilities to:

- Recognize privacy risks early

- Evaluate them systematically

- Respond thoughtfully

- Learn continuously

- Prioritize human autonomy

This requires moving beyond compliance theater to genuine privacy thinking. It means accepting that legal expertise, while valuable, isn't sufficient. It means recognizing that the most dangerous privacy risks often lurk in the gaps between what's regulated and what's possible.

The Deeper Truth About Privacy Assessment

As we've explored, effective privacy assessment isn't about mastering regulations or completing compliance checklists. It's about developing systematic ways to recognize and evaluate risks to human autonomy in digital contexts. It's about asking better questions, not having all the answers.

The paradox is real: To assess privacy risks effectively, you must understand systems you can't fully see, evaluate futures you can't completely predict, and protect against harms that haven't yet been named. But this isn't cause for paralysis—it's reason for humility and systematic thinking.

You don't need to be a legal expert to lead privacy initiatives. In fact, legal expertise alone is insufficient. What you need is:

- Systems thinking to understand how components interact

- Ethical reasoning to evaluate impacts on human autonomy

- Technical literacy to ask good questions

- Social awareness to recognize contextual norms

- Intellectual humility to acknowledge what you don't know

The frameworks and approaches we've explored—from contextual integrity to power analysis, from red teaming to temporal assessment—provide structure for this work. They help you see what compliance audits miss and evaluate what regulations haven't yet addressed.

Most importantly, they help you remember what privacy assessment is really about: not checking boxes or avoiding fines, but protecting the conditions necessary for human flourishing in a digital world. When we approach privacy assessment with this understanding, we move from defensive compliance to proactive protection.

The choice is ours. We can continue treating privacy assessment as a specialized legal function, missing most of the risks that actually matter. Or we can democratize privacy thinking, building broad organizational capabilities to recognize and address risks to human autonomy wherever they emerge.

The technical challenges are real, but they're surmountable with systematic thinking and genuine commitment. The bigger challenge is cultural—moving organizations from "what must we do?" to "what should we do?" But this is precisely why non-lawyers are often better positioned to lead privacy initiatives. Unburdened by regulatory frameworks, they can ask the deeper questions that real privacy protection requires.

Privacy assessment isn't about understanding every regulation or predicting every risk. It's about building systems that respect human autonomy even as capabilities evolve and contexts shift. When we get this right, we create not just compliant organizations but trustworthy ones.

This is the second in our series exploring data privacy from philosophical and practical perspectives. Next, we'll examine how to build privacy programs that actually work—moving from assessment to implementation with structures that make privacy protection sustainable at scale.