The Next Horizon: Data Literacy in the Age of AI and Ambient Intelligence

The Bottom Line Up Front: The future of data literacy isn't about everyone becoming data scientists—it's about creating symbiotic relationships between human judgment and machine intelligence. Organizations that thrive will be those that evolve from teaching people to work with data to teaching them to collaborate with systems that work with data, while maintaining the philosophical sophistication to question, interpret, and contextualize what these systems produce.

The Paradigm Shift: From Data Literacy to Intelligence Literacy

We stand at an inflection point. For the past decade, data literacy initiatives have focused on helping humans directly manipulate and interpret data. But emerging technologies are fundamentally changing what it means to be "data literate." The question is no longer just "Can you analyze data?" but "Can you effectively collaborate with systems that analyze data for you?"

This shift doesn't diminish the importance of data literacy—it amplifies it. As AI systems become more sophisticated at processing information, the human role evolves from data processor to intelligence orchestrator. This requires not less sophistication but more: the ability to frame questions, evaluate machine-generated insights, and apply contextual judgment that algorithms cannot.

Consider the radiologist who no longer needs to manually scan thousands of images but must now evaluate AI-detected anomalies, understanding both the system's capabilities and limitations. Or the financial analyst who doesn't build models from scratch but must assess whether an AI's market predictions align with qualitative factors the system cannot perceive. These professionals need deeper, not shallower, understanding of how data becomes insight.

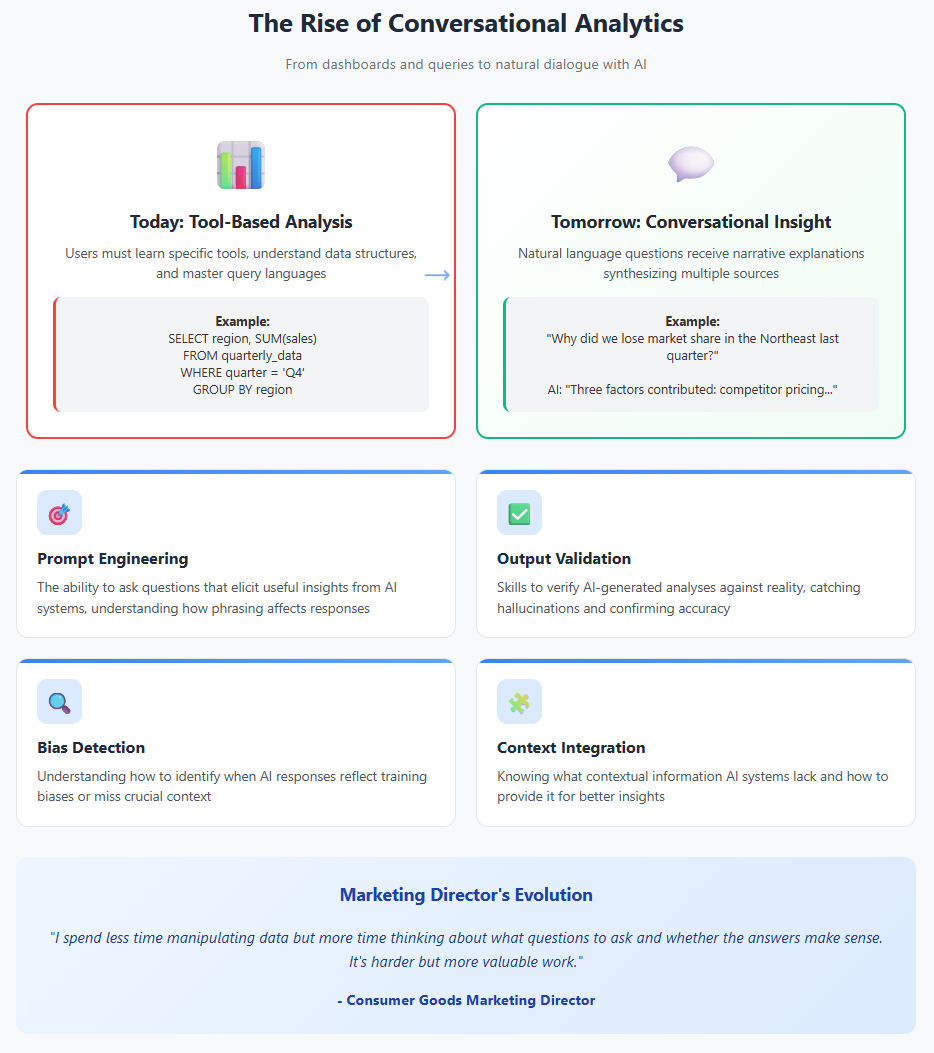

Trend 1: The Rise of Conversational Analytics

The Current Reality

Today's data literacy assumes people will interact with data through dashboards, queries, and visualizations. Users must learn specific tools, understand data structures, and master query languages. This creates a fundamental barrier: the translation cost between human questions and data answers.

The Emerging Paradigm

Large Language Models (LLMs) are demolishing this barrier. Within five years, most data interaction will be conversational. Imagine a sales manager asking, "Why did we lose market share in the Northeast last quarter?" and receiving not just numbers but a narrative explanation that synthesizes multiple data sources, highlights key factors, and suggests areas for investigation.

This isn't science fiction: early versions exist today. But the implications for data literacy are profound. As Cathy O'Neil warns in Weapons of Math Destruction, models are opinions embedded in mathematics, and an algorithm is only as sound as the assumptions baked into it. When those algorithms become conversational partners, understanding their assumptions becomes even more critical.

What This Means for Data Literacy

New Competencies Required:

- Prompt Engineering: The ability to ask questions that elicit useful insights from AI systems

- Output Validation: Skills to verify AI-generated analyses against reality

- Bias Detection: Understanding how to identify when AI responses reflect training biases

- Context Integration: Knowing what contextual information AI systems lack and how to provide it
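Output validation can start with something as simple as recomputing a figure the AI reports from the underlying records before acting on it. The sketch below assumes the AI's answer has already been reduced to a single claimed number and that the raw rows are at hand; `validate_claim`, the `SaleRow` fields, and the 1% tolerance are hypothetical choices for illustration, not part of any specific analytics product.

```python
from dataclasses import dataclass


@dataclass
class SaleRow:
    # Hypothetical source record; real schemas will differ.
    region: str
    quarter: str
    revenue: float


def validate_claim(rows, region, quarter, claimed_total, rel_tolerance=0.01):
    """Recompute a regional total from source rows and compare it with the
    figure an AI assistant reported. Returns (ok, recomputed_total)."""
    recomputed = sum(
        r.revenue for r in rows if r.region == region and r.quarter == quarter
    )
    if recomputed == 0:
        # No matching data: only a claim of zero counts as consistent.
        return claimed_total == 0, recomputed
    relative_error = abs(claimed_total - recomputed) / abs(recomputed)
    return relative_error <= rel_tolerance, recomputed


rows = [
    SaleRow("Northeast", "Q3", 120_000.0),
    SaleRow("Northeast", "Q3", 80_000.0),
    SaleRow("Midwest", "Q3", 95_000.0),
]

# The assistant claimed Northeast Q3 revenue of 250,000; the source says otherwise.
ok, actual = validate_claim(rows, "Northeast", "Q3", claimed_total=250_000.0)
print(ok, actual)
```

Here the recomputed total is 200,000, so the claim fails the check. The point is the habit, not the code: a plausible-sounding number is treated as a hypothesis until it is traced back to the data.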

Case in Point: The Marketing Director's Evolution

A marketing director at a consumer goods company recently transitioned from spending hours in Excel to having conversational sessions with an AI analyst. But rather than making her job easier, it made it more intellectually demanding. She now needs to:

- Frame questions precisely to get actionable insights

- Recognize when the AI is hallucinating plausible but incorrect patterns

- Integrate market knowledge the AI lacks

- Validate AI suggestions against business reality

Her reflection: "I spend less time manipulating data but more time thinking about what questions to ask and whether the answers make sense. It's harder but more valuable work."

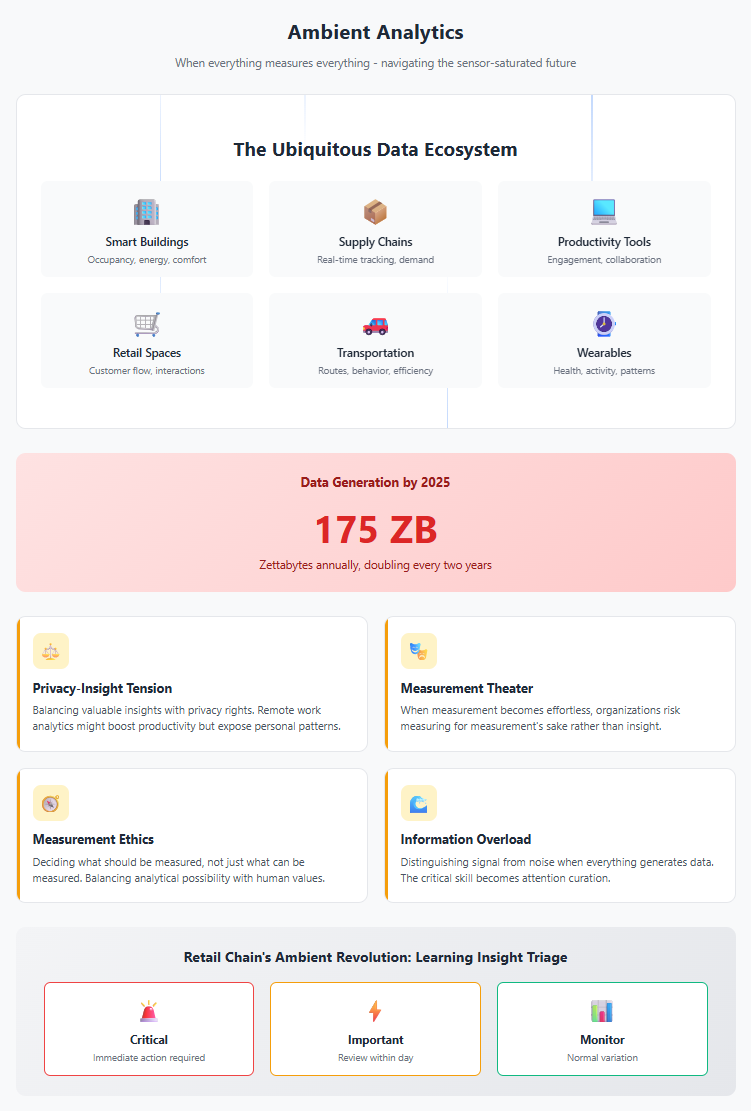

Trend 2: Ambient Analytics - When Everything Measures Everything

The Sensor-Saturated Future

We're entering an era of ambient analytics where data collection becomes invisible and ubiquitous. Smart buildings adjust based on occupancy patterns. Supply chains self-optimize based on real-time demand signals. Employee productivity tools passively measure engagement and collaboration patterns.

This creates what researchers call "data exhaust": the continuous stream of behavioral data generated by digital interactions. The volume is overwhelming: IDC projected that the global datasphere, the data created, captured, and replicated each year, would reach 175 zettabytes by 2025.

The Philosophical Challenge

This abundance creates new philosophical challenges. As Shoshana Zuboff argues in The Age of Surveillance Capitalism, the decisive questions are less about what the data says than about who decides what is asked of it, and for what purpose.

When everything can be measured, the critical skill becomes deciding what should be measured and why. This requires what we might call "measurement ethics"—the ability to balance analytical possibility with human values.

Organizational Implications

The Privacy-Insight Tension: Organizations will need to navigate the tension between gathering insights and respecting privacy. A workplace analytics system might reveal that remote employees are more productive, but also expose personal patterns employees didn't consent to share.

The Measurement Theater Problem: When measurement becomes effortless, organizations risk measuring for measurement's sake. The future data literate professional must distinguish between metrics that inform and metrics that merely perform.

Example: The Retail Chain's Ambient Revolution

A major retailer implemented ambient analytics across 500 stores—cameras tracking customer flow, sensors monitoring product interaction, systems analyzing purchase patterns in real-time. The technical implementation was flawless. The human challenge was harder.

Store managers, overwhelmed by continuous insights, initially tried to respond to every anomaly. Stress levels soared. The solution came from teaching "insight triage"—helping managers distinguish between signals requiring action and normal variation. Data literacy evolved from reading dashboards to curating attention.
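The source does not say how the retailer taught managers to separate signal from noise. One classic approach is a Shewhart-style control chart: establish a baseline, then flag only readings outside the mean plus or minus three standard deviations. The sketch below is a simplified version under that assumption; `triage` and the foot-traffic numbers are hypothetical.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Return (lower, upper) limits as mean +/- k sample standard deviations
    of a baseline period. A simplified Shewhart-style rule; production control
    charts often estimate spread from moving ranges instead."""
    mean = statistics.mean(baseline)
    sd = statistics.stdev(baseline)
    return mean - k * sd, mean + k * sd


def triage(baseline, new_readings, k=3.0):
    """Label each new reading 'act' if it falls outside the limits,
    otherwise 'normal variation'."""
    lower, upper = control_limits(baseline, k)
    return [
        (value, "act" if value < lower or value > upper else "normal variation")
        for value in new_readings
    ]


# Hypothetical hourly foot-traffic counts for one store.
baseline = [410, 395, 420, 405, 398, 415, 402, 411, 399, 407]
today = [404, 388, 428, 290, 470]

for value, label in triage(baseline, today):
    print(value, label)
```

With this baseline, the limits come out at roughly 382 to 430, so only the readings of 290 and 470 are flagged; the other three swings, which might each have triggered a response before, are treated as normal variation.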

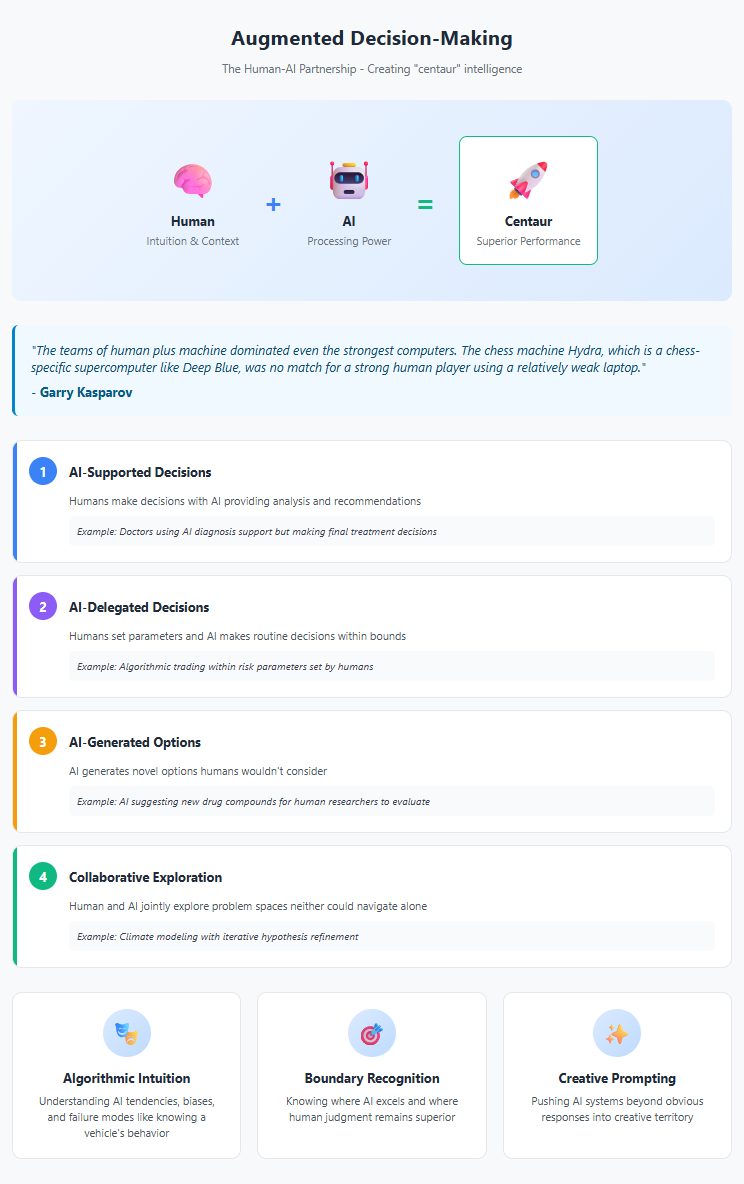

Trend 3: Augmented Decision-Making - The Human-AI Partnership

Beyond Automation to Augmentation

The narrative of AI replacing human decision-makers misses the more interesting story: AI augmenting human judgment in ways that make both more powerful. This isn't about machines making decisions for us but about creating what researchers call "centaur" decision-making—combining human intuition with machine processing power.

As Garry Kasparov wrote years after his matches with Deep Blue, describing "freestyle" tournaments in which players could consult computers: "The teams of human plus machine dominated even the strongest computers. The chess machine Hydra, which is a chess-specific supercomputer like Deep Blue, was no match for a strong human player using a relatively weak laptop."

The New Decision Architecture

Future organizations will need decision architectures that optimize human-AI collaboration:

Level 1 - AI-Supported Decisions: Humans make decisions with AI providing analysis and recommendations. Example: Doctors using AI diagnosis support but making final treatment decisions.

Level 2 - AI-Delegated Decisions: Humans set parameters and AI makes routine decisions within bounds. Example: Algorithmic trading within risk parameters set by humans.

Level 3 - AI-Generated Options: AI generates novel options humans wouldn't consider. Example: AI suggesting new drug compounds for human researchers to evaluate.

Level 4 - Collaborative Exploration: Human and AI jointly explore problem spaces neither could navigate alone. Example: Climate modeling where human scientists and AI systems iteratively refine hypotheses.
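Level 2 is the easiest of these levels to make concrete: humans define the bounds, and the system acts on its own only inside them, handing everything else back to a person. A minimal sketch, assuming a hypothetical trade-approval setting; `RiskLimits`, its fields, and the routing labels are illustrative and not drawn from any real trading platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskLimits:
    """Parameters set by humans; frozen so the automated path cannot alter them."""
    max_order_value: float
    max_daily_exposure: float


def route_decision(order_value, current_exposure, limits):
    """Return 'auto-execute' when the order stays within the human-set bounds
    (Level 2), otherwise 'escalate' so a person makes the call (Level 1)."""
    within_order_cap = order_value <= limits.max_order_value
    within_exposure = current_exposure + order_value <= limits.max_daily_exposure
    if within_order_cap and within_exposure:
        return "auto-execute"
    return "escalate"


limits = RiskLimits(max_order_value=50_000, max_daily_exposure=200_000)
print(route_decision(20_000, 100_000, limits))  # within both bounds
print(route_decision(60_000, 100_000, limits))  # order exceeds the per-order cap
print(route_decision(40_000, 180_000, limits))  # would breach the daily exposure cap
```

The design choice worth noticing is that out-of-bounds cases fall back to human judgment rather than being silently rejected, which keeps the levels connected instead of treating them as separate systems.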

Required Meta-Literacy Skills

This partnership model requires what we might call "meta-literacy"—literacy about literacy itself:

Algorithmic Intuition: Understanding not the technical details of how algorithms work but their tendencies, biases, and failure modes. Like experienced drivers who develop intuition about their vehicle's behavior, future professionals need intuition about their AI partners' behaviors.

Boundary Recognition: Knowing where AI excels and where human judgment remains superior. AI might identify patterns in customer behavior, but humans understand why customers feel frustrated when those patterns are exploited.

Creative Prompting: The ability to push AI systems beyond their obvious responses into creative territory. This is less about technical skill than imaginative thinking about how to combine human creativity with machine capability.

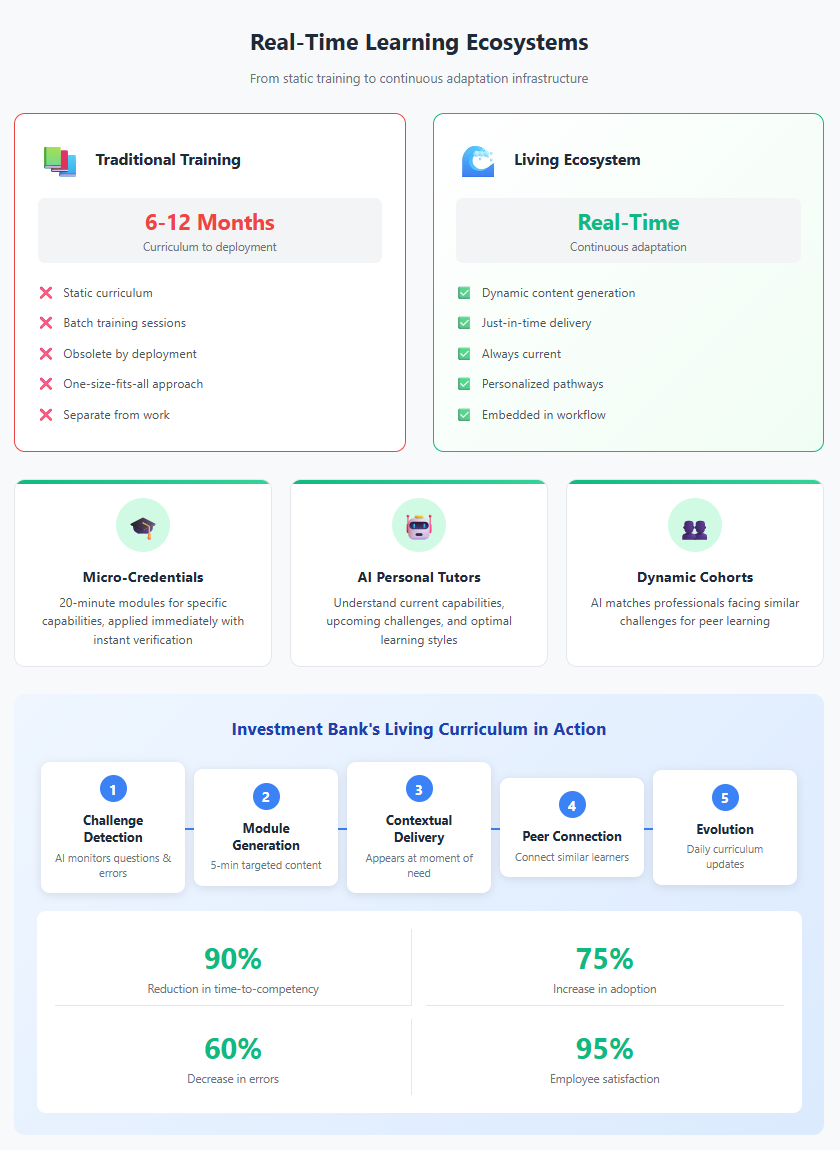

Trend 4: Real-Time Learning Ecosystems

The Death of Traditional Training

The half-life of specific technical skills continues to shrink. By the time a traditional data literacy curriculum is developed, approved, and deployed, the tools and techniques it teaches may be obsolete. This accelerating obsolescence demands fundamentally new approaches to capability building.

Continuous Adaptation Infrastructure

Future organizations will build what we might call "learning ecosystems"—environments where skill development happens continuously and contextually:

Just-in-Time Micro-Credentials: Instead of comprehensive certifications, professionals will earn micro-credentials for specific capabilities as needed. Need to analyze customer churn? Complete a 20-minute module, apply it immediately, and receive verification of competency.

AI-Powered Personal Tutors: Every professional will have access to AI tutors that understand their current capabilities, upcoming challenges, and optimal learning styles. These tutors will provide personalized guidance moments before it's needed.

Peer Learning Networks: AI will match professionals facing similar challenges, creating dynamic learning cohorts that form, share knowledge, and dissolve as needs change. The social dimension of learning, once confined to a classroom or a single team, can scale across the whole organization.

Case Study: The Investment Bank's Living Curriculum

A global investment bank abandoned traditional data literacy training in favor of what they call a "living curriculum." Here's how it works:

- Challenge Detection: AI monitors the questions analysts ask and the errors they make, identifying skill gaps in real-time

- Micro-Module Generation: AI creates targeted 5-minute learning modules addressing specific gaps

- Contextual Delivery: Learning content appears within workflow at the moment of need

- Peer Connection: Analysts struggling with similar concepts are connected for collaborative learning

- Continuous Evolution: The curriculum evolves daily based on new tools, techniques, and use cases

Results after 18 months:

- 90% reduction in time-to-competency for new analytical techniques

- 75% increase in advanced analytics adoption

- 60% decrease in analytical errors

- 95% employee satisfaction with learning approach

The key insight: Learning became invisible, happening within work rather than separate from it.

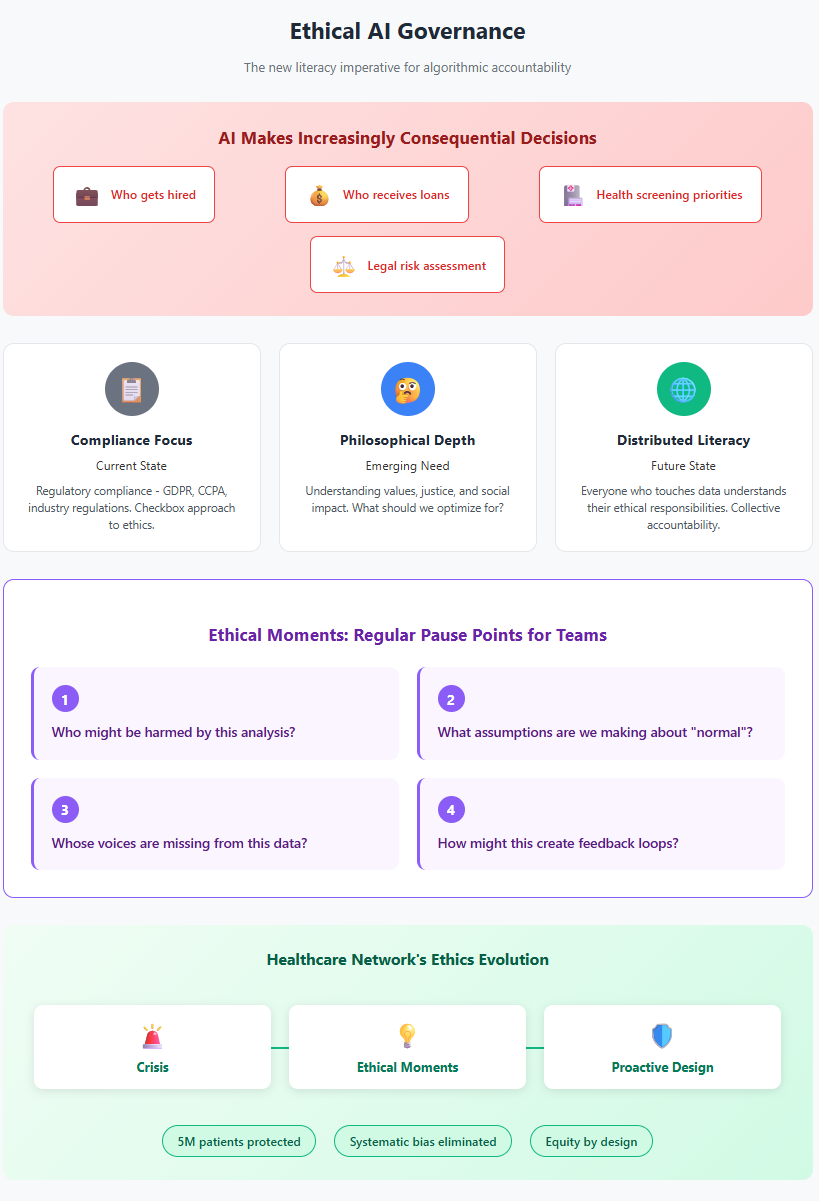

Trend 5: Ethical AI Governance - The New Literacy Imperative

The Stakes Keep Rising

As AI systems make increasingly consequential decisions—who gets hired, who receives loans, who undergoes additional health screening—the ability to question and govern these systems becomes critical. Data literacy must expand to include what we might call "algorithmic accountability literacy."

This isn't just about fairness metrics and bias detection, though these matter. It's about understanding the deeper philosophical questions: What values are encoded in our systems? Who benefits from particular analytical approaches? What types of knowledge are privileged or excluded?
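To make "fairness metrics" less abstract, one widely used screening check is the four-fifths rule from the U.S. Uniform Guidelines on Employee Selection Procedures: a selection rate for any group below 80% of the rate for the highest-rate group is generally regarded as evidence of adverse impact. It is a heuristic that prompts the deeper questions above, not a verdict on its own. The sketch below uses made-up hiring counts; the function names and group labels are hypothetical.

```python
def adverse_impact_ratios(outcomes):
    """outcomes maps group -> (selected, applicants), with applicants > 0 and
    at least one selection overall. Returns group -> ratio of that group's
    selection rate to the highest group's rate."""
    rates = {g: selected / applicants for g, (selected, applicants) in outcomes.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


def flag_four_fifths(outcomes, threshold=0.8):
    """Return the sorted list of groups whose impact ratio is below 4/5."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(g for g, r in ratios.items() if r < threshold)


# Hypothetical screening results from an AI-assisted hiring tool.
outcomes = {
    "group_a": (48, 100),  # 48% selected
    "group_b": (30, 100),  # 30% selected
    "group_c": (45, 100),  # 45% selected
}

print(adverse_impact_ratios(outcomes))
print(flag_four_fifths(outcomes))
```

Group B's rate is 0.625 of group A's, so it is flagged; group C, at about 0.94, is not. Passing the check says nothing about whether the underlying criteria are just, which is exactly why the philosophical questions still matter.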

The Governance Evolution

From Compliance to Philosophy: Current governance focuses on regulatory compliance—GDPR, CCPA, industry regulations. Future governance will require philosophical sophistication about values, justice, and social impact.

From Technical to Sociotechnical: Understanding algorithms isn't enough. We need literacy about how technical systems interact with social systems, creating feedback loops that can amplify inequalities or create new forms of discrimination.

From Individual to Collective: Ethical AI governance can't be the responsibility of a single ethics board. It requires distributed literacy—everyone who touches data needs to understand their ethical responsibilities.

Practical Implementation: The Healthcare Network's Ethics Evolution

A healthcare network treating 5 million patients annually faced a crisis when their AI scheduling system was found to systematically disadvantage certain communities. The technical fix was straightforward. The cultural transformation was not.

They implemented what they call "Ethical Moments"—regular pause points where teams ask:

- Who might be harmed by this analysis?

- What assumptions are we making about "normal"?

- Whose voices are missing from this data?

- How might this create feedback loops?

Every data interaction became an opportunity for ethical reflection. Within two years, they went from reactive fixes to proactive design for equity. The key was making ethics practical, not theoretical.

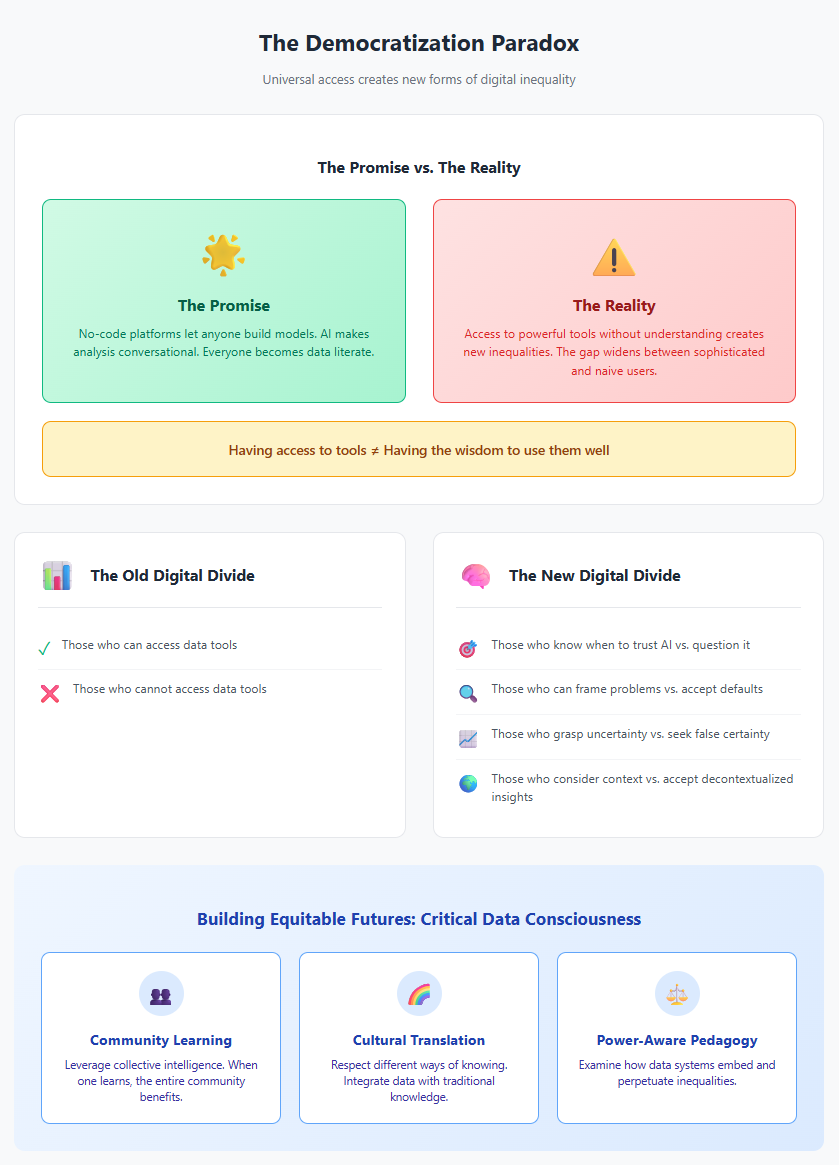

Trend 6: The Democratization Paradox

Universal Access, Unequal Outcomes

Emerging technologies promise to democratize data analysis. No-code platforms let anyone build models. AI assistants make analysis conversational. Automated insights deliver findings without human intervention. In theory, everyone becomes data literate.

In practice, we're seeing what researchers call the "democratization paradox"—as tools become more accessible, the gap between sophisticated and naive users widens. Having access to powerful tools without understanding their limitations creates new forms of digital inequality.

The New Digital Divide

The divide is no longer between those who can and cannot access data tools. It's between:

- Those who understand when to trust AI and when to question it versus those who take its outputs at face value

- Those who can frame problems effectively versus those who accept default framings

- Those who grasp uncertainty and probability versus those who seek false certainty

- Those who consider context versus those who accept decontextualized insights
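The gap between grasping uncertainty and seeking false certainty shows up even in a single conversion rate: the same observed 80% means very different things at 10 observations and at 1,000. A minimal sketch using the Wilson score interval for a proportion, one standard choice among several; the counts are made up for illustration.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% confidence interval (z = 1.96) for a proportion,
    using the Wilson score method."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        p * (1 - p) / trials + z**2 / (4 * trials**2)
    )
    return center - half_width, center + half_width


for successes, trials in [(8, 10), (800, 1000)]:
    low, high = wilson_interval(successes, trials)
    print(f"{successes}/{trials}: {successes / trials:.0%} observed, "
          f"plausible range {low:.2f} to {high:.2f}")
```

At 8 of 10, the plausible range runs from about 0.49 to 0.94; at 800 of 1,000, it narrows to about 0.77 to 0.82. A dashboard or chatbot that reports "80%" in both cases is technically accurate and practically misleading.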

Building Equitable Futures

Organizations serious about equitable data literacy must go beyond tool access to build what we might call "critical data consciousness":

Community-Centered Learning: Instead of individual skill building, create community learning approaches that leverage collective intelligence. When one person learns to question an algorithm's assumptions, the entire community benefits.

Cultural Translation: Develop data literacy approaches that respect different ways of knowing. Indigenous communities might integrate data insights with traditional knowledge. Artist communities might approach data visualization as creative expression.

Power-Aware Pedagogy: Acknowledge that data literacy isn't neutral—it can reinforce or challenge existing power structures. Teaching must include critical examination of how data systems embed and perpetuate inequalities.

The Organizational Transformation Imperative

From Data-Driven to Intelligence-Orchestrated

The organizations that thrive in this emerging landscape won't be those with the most data or the best algorithms. They'll be those that most effectively orchestrate human and machine intelligence toward meaningful outcomes.

This requires fundamental organizational redesign:

Fluid Team Structures: As AI handles routine analysis, human teams will form dynamically around complex problems requiring judgment, creativity, and contextual understanding. Organization charts give way to problem-solving networks.

Continuous Experimentation Culture: With AI enabling rapid hypothesis testing, organizations must become comfortable with constant experimentation. Failure becomes data, and learning accelerates exponentially.

Values-Embedded Operations: As systems become more autonomous, values must be explicitly encoded rather than implicitly assumed. Every algorithm embodies choices about what matters.

The New C-Suite Capabilities

Leadership in this environment requires new literacies:

Chief Intelligence Officers: Beyond data, leaders must orchestrate multiple forms of intelligence—human, artificial, collective. This role combines technical sophistication with philosophical depth.

Algorithmic Auditors: Every organization needs leaders who can interrogate AI systems, understanding not just what they do but what values they embed and what biases they perpetuate.

Learning Architects: As continuous learning becomes survival, organizations need leaders who design learning ecosystems rather than training programs.

Preparing for the Unknown: Building Adaptive Capacity

The Certainty of Uncertainty

If the past decade has taught us anything, it's that specific predictions about technology usually miss the mark. Who predicted that large language models would emerge from trying to predict the next word in a sentence? Who foresaw that data literacy would become dinner table conversation during a pandemic?

Rather than predicting specific futures, organizations should build what complexity scientists call "adaptive capacity"—the ability to respond effectively to whatever emerges.

The Meta-Principles for Future-Readiness

Philosophical Sophistication Over Technical Specificity: The technical tools will change. The philosophical questions—What can we know? How should we decide? What do we value?—remain constant. Organizations that ground data literacy in philosophical foundations will adapt more readily than those focused on specific technologies.

Learning How to Learn: The most important capability is the ability to rapidly acquire new capabilities. Organizations should measure not what people know but how quickly they can learn what they need to know.

Ethical Reflexivity: As systems become more powerful, the ability to step back and ask "Should we?" becomes as important as "Can we?" Building ethical reflection into organizational DNA creates resilience against unforeseen consequences.

Human-Centered Design: Whatever technologies emerge, humans will remain at the center. Organizations that maintain focus on human needs, capabilities, and values will navigate technological change more successfully than those that chase technical capabilities.

The Path Forward: Recommendations for Leaders

Immediate Actions (Next 6 Months)

- Audit Current AI Readiness: Assess not just technical infrastructure but organizational readiness for human-AI collaboration. Where are people excited? Where are they fearful? What myths need addressing?

- Pilot Conversational Analytics: Begin experimenting with LLM-based analytics tools in low-stakes environments. Learn how your organization naturally interacts with conversational AI.

- Establish Ethical Frameworks: Before AI becomes pervasive, establish clear ethical guidelines. What decisions will always require human judgment? What values must all systems respect?

- Create Learning Labs: Designate spaces—physical or virtual—where people can experiment with emerging technologies without pressure to produce immediate results.

Medium-Term Initiatives (6-18 Months)

- Redesign Decision Processes: Map critical decisions and redesign them for human-AI collaboration. Where can AI augment human judgment? Where must humans retain control?

- Build Algorithmic Literacy Programs: Move beyond traditional data literacy to include algorithmic intuition, prompt engineering, and AI collaboration skills.

- Develop Governance Structures: Create cross-functional teams responsible for AI governance, including technical, ethical, and business perspectives.

- Invest in Continuous Learning Infrastructure: Begin transitioning from periodic training to continuous learning ecosystems.

Long-Term Transformation (18+ Months)

- Reimagine Organizational Structure: As AI handles routine analysis, restructure around complex problem-solving and creative work that requires human judgment.

- Cultivate Philosophical Leadership: Develop leaders who combine technical sophistication with philosophical depth, capable of navigating ethical complexities.

- Build Adaptive Capacity: Create organizational cultures that thrive on change rather than resist it, measuring success by adaptability rather than efficiency.

- Foster Human-AI Symbiosis: Move beyond thinking of AI as a tool to considering it a collaborator, designing systems that amplify both human and machine capabilities.

Conclusion: The Enduring Human Element

As we stand on the brink of this transformation, it's tempting to be swept away by technological possibilities or paralyzed by their implications. But the core truth remains: data literacy, in whatever form it takes, is fundamentally about helping humans make better decisions in complex environments.

The tools will evolve. Conversational interfaces will replace dashboards. AI will handle analyses that today require teams of specialists. Ambient analytics will make data collection invisible. But the need for human judgment, creativity, and wisdom will only grow.

The organizations that thrive won't be those with the most advanced AI or the largest datasets. They'll be those that most effectively combine human insight with machine intelligence, that maintain philosophical sophistication while embracing technological capability, that remember that all data ultimately serves human purposes.

The future of data literacy isn't about making everyone a data scientist. It's about helping everyone become a more thoughtful, capable partner in the dance between human wisdom and artificial intelligence. It's about maintaining our humanity while amplifying our capabilities. It's about asking not just "What can we know?" but "What should we do with what we know?"

This is the challenge and the opportunity before us. The technical barriers to data analysis are crumbling. The philosophical challenges are just beginning. Organizations that recognize this—that invest in human judgment as much as artificial intelligence, that cultivate wisdom alongside capability—will shape the future rather than be shaped by it.

The journey we've traced through this series—from philosophical foundations through practical implementation, real-world cases, and obstacle navigation to future possibilities—reveals a consistent theme: successful data literacy transformation requires both technical capability and human wisdom. As we enter an age of ambient intelligence and human-AI collaboration, this balance becomes even more critical.

The future belongs to organizations that can orchestrate intelligence in all its forms toward purposes that matter. The question isn't whether to embrace this future—it's how to shape it in ways that amplify the best of human capability while mitigating the risks of algorithmic authority.

The path forward is clear: invest in human judgment, build ethical frameworks, create adaptive cultures, and never forget that behind every dataset, algorithm, and insight is a human question seeking a meaningful answer. This is the future of data literacy—not the replacement of human intelligence but its augmentation and elevation.

This concludes our five-part series on data literacy transformation. The journey from philosophical foundations to future possibilities reveals that building data literacy isn't just an organizational capability—it's an essential element of thriving in our increasingly complex world. The organizations that commit to this journey, with all its challenges and opportunities, will be those that shape the future rather than merely adapt to it.