From Philosophy to Practice: Implementing Data Literacy Programs That Actually Work

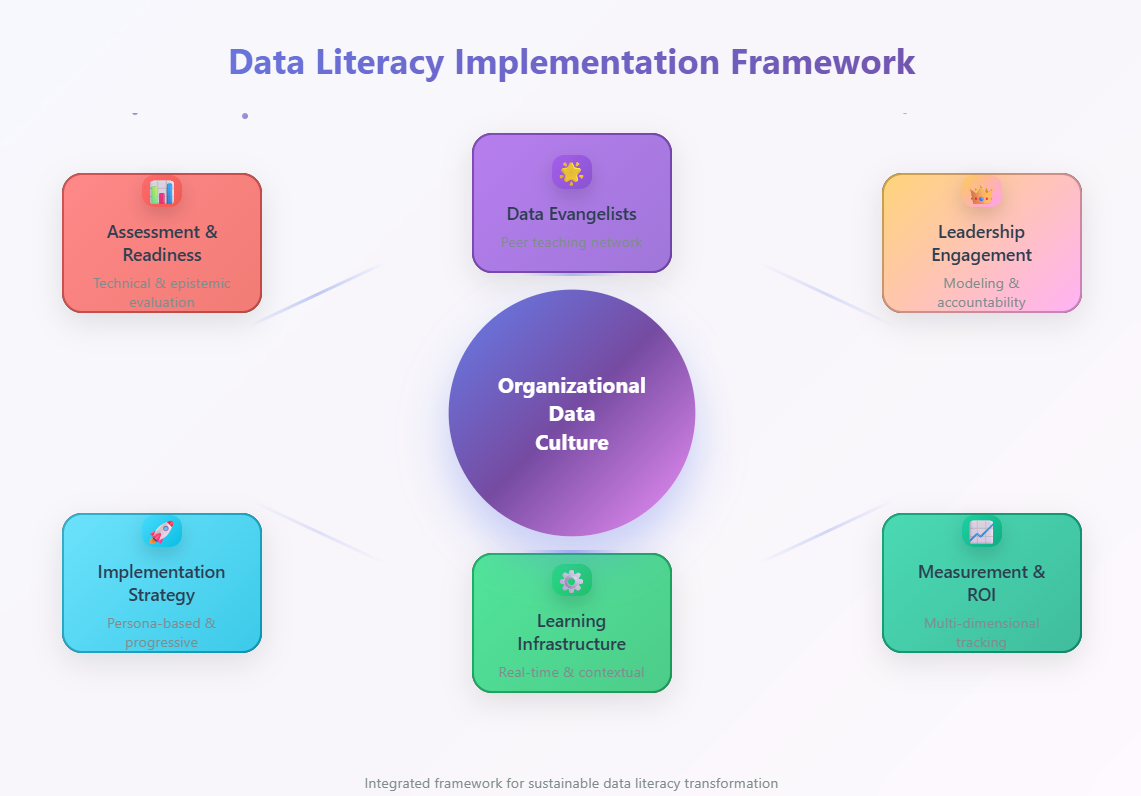

The Bottom Line Up Front: Building effective data literacy programs isn't about deploying training modules—it's about orchestrating organizational transformation that bridges the gap between philosophical understanding and practical application. The most successful implementations combine rigorous assessment, strategic leadership engagement, real-time learning infrastructure, and sophisticated measurement approaches that go far beyond traditional training metrics.

The Implementation Paradox: Why Most Data Literacy Programs Fail

We have a curious situation in the data literacy space. Organizations widely recognize its importance: according to research from DataCamp, 84% of businesses see data literacy as a core competency over the next five years. Yet, as The Decision Lab reports, when Accenture surveyed more than 9,000 employees, "only 21% were confident in their data literacy skills."

This gap between aspiration and achievement isn't about a lack of commitment or resources. It's about a fundamental misunderstanding of what implementation really requires. As Elizabeth Reinhart, Data & AI Analytics Capability Building Senior Manager at Allianz, puts it: "A data literacy program is about developing skills and driving a cultural shift toward a data-informed mindset. This process requires continuous communication and engagement."

The problem is that most organizations approach data literacy implementation as they would any other training initiative: develop content, deliver it to employees, measure completion rates, and declare success. But data literacy isn't just another skill set. The MIT Sloan Management Review describes it as requiring people "to be verbally literate, numerically literate, and graphically literate" simultaneously, a challenging combination of skills that "haven't always been taught together."

This complexity demands what we might call "philosophical implementation"—approaches that honor the deeper epistemological foundations we explored in our previous post while creating practical pathways for organizational change.

The Foundation: Assessment and Organizational Readiness

Before any organization can build effective data literacy capabilities, it must understand where it currently stands. This goes far beyond asking people to rate their comfort with spreadsheets on a 1-10 scale. Effective assessment requires what Info-Tech Research Group calls "understanding your organization's data maturity and role centricity" as "a prerequisite to prioritize your learning outcomes."

The Four-Dimensional Assessment Framework

The most sophisticated assessment approaches use what researchers call the "four dimensions" of data literacy. As outlined by MIT professors Catherine D'Ignazio and Rahul Bhargava, these are the ability to: read data (understanding what data represents), work with data (creating, acquiring, cleaning, and managing it), analyze data (filtering, sorting, aggregating, and performing analytic operations), and argue with data (using data to support narratives and communicate messages).

But here's where the philosophical grounding becomes crucial. Traditional assessments focus on technical competencies—can someone create a pivot table, interpret a correlation coefficient, or build a basic visualization? These are important, but they miss the deeper epistemic dimensions that determine whether someone will actually use data effectively in decision-making contexts.

Reading Data: Beyond Basic Interpretation

Effective data reading assessment must evaluate both technical comprehension and philosophical sophistication. Sample assessment questions might include:

Technical Level: "Looking at this chart showing customer satisfaction scores over time, what trend do you observe?"

Epistemic Level: "What assumptions about data collection methods would you want to verify before drawing conclusions from this trend? What factors not captured in this data might explain the pattern you're seeing?"

The difference is crucial. The first question tests chart-reading skills. The second tests empirical thinking—the kind of skeptical inquiry that distinguishes sophisticated data consumers from those who simply process information without considering its limitations.

Working with Data: Process and Philosophy

Assessment of data work capabilities should evaluate not just technical proficiency but understanding of the epistemological principles underlying good data practice. This means asking questions like:

Technical Level: "How would you clean this dataset that contains missing values and obvious errors?"

Epistemic Level: "What philosophical assumptions are you making when you decide to exclude certain data points as 'errors'? How might those decisions bias your subsequent analysis?"

These questions probe whether someone understands that data work isn't a neutral technical activity but involves interpretive choices that can significantly impact conclusions.
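To make the epistemic question concrete, here is a minimal sketch of how a single exclusion rule changes a summary statistic. The order values and the threshold of 100 are hypothetical, chosen only for illustration; in a real dataset the two large values might be entry errors or genuine bulk orders, and the code cannot tell which.

```python
from statistics import mean, median

# Hypothetical daily order values. The last two look like "obvious errors",
# but they could just as well be legitimate bulk orders.
orders = [42, 38, 45, 40, 41, 39, 44, 400, 380]

def drop_above(values, threshold):
    """Exclude every value above the threshold (one possible cleaning rule)."""
    return [v for v in values if v <= threshold]

# The threshold is an interpretive choice, not a fact about the data.
cleaned = drop_above(orders, threshold=100)

print(f"mean with all points:   {mean(orders):.1f}")
print(f"mean after exclusion:   {mean(cleaned):.1f}")
print(f"median with all points: {median(orders)}")
print(f"median after exclusion: {median(cleaned)}")
```

In this toy case the mean moves substantially while the median barely changes, which is one way to show learners that the "cleaning" decision, and the choice of summary statistic, both shape the conclusion.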

Analyzing Data: Method and Meaning

Data analysis assessment must go beyond testing statistical knowledge to evaluate whether people understand the relationship between analytical methods and the kinds of questions they can legitimately answer.

Technical Level: "What statistical test would you use to determine if there's a significant difference between these two groups?"

Epistemic Level: "What does 'statistical significance' actually tell us about the practical importance of the difference you've found? What would you need to know to determine whether this finding should influence business decisions?"
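The gap between statistical and practical significance can be illustrated with a short sketch. It assumes a simple two-sided z-test with a known, equal standard deviation in both groups, and the satisfaction scores are hypothetical. The same tiny difference is "significant" with a very large sample and not with a small one, while the effect size stays the same.

```python
from math import sqrt
from statistics import NormalDist

def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Two-sided z-test for a difference in means.

    Assumes equal group sizes and a known, shared standard deviation.
    Returns (z statistic, p-value, standardized effect size).
    """
    standard_error = sd * sqrt(2 / n_per_group)
    z = (mean_b - mean_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    effect_size = (mean_b - mean_a) / sd  # Cohen's d with a known sd
    return z, p_value, effect_size

# Hypothetical satisfaction scores on a 10-point scale: 7.00 vs 7.05, sd 1.5.
z_big, p_big, d_big = two_sample_z(7.00, 7.05, sd=1.5, n_per_group=50_000)
z_small, p_small, d_small = two_sample_z(7.00, 7.05, sd=1.5, n_per_group=200)

print(f"n=50,000 per group: p={p_big:.2g}, effect size={d_big:.3f}")
print(f"n=200 per group:    p={p_small:.2g}, effect size={d_small:.3f}")
```

The p-value answers "could this difference be noise?", not "is this difference large enough to act on?". Whether a 0.05-point shift matters is a business judgment the test cannot make.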

Arguing with Data: Rhetoric and Responsibility

Perhaps most importantly, assessment must evaluate whether people can use data responsibly in persuasive contexts—understanding both the power and the ethical obligations involved in data-driven argumentation.

Technical Level: "Create a visualization that effectively communicates the key findings from this analysis."

Epistemic Level: "How would you present these findings to different audiences with potentially conflicting interests? What ethical obligations do you have when using data to support a particular recommendation?"

Organizational Maturity Assessment

Beyond individual capabilities, effective assessment must evaluate organizational readiness for data literacy transformation. This involves what we might call "institutional epistemology"—how the organization currently approaches evidence, decision-making, and learning.

Culture Assessment: Does the organization reward empirical thinking or political maneuvering? Are people comfortable acknowledging uncertainty and changing their minds when presented with contradictory evidence? Do leaders model the kind of analytical thinking they expect from others?

Process Assessment: Are decision-making processes designed to surface and test assumptions? Do meetings include systematic consideration of data quality and analytical limitations? Are there mechanisms for learning from decisions that don't work out as expected?

Infrastructure Assessment: Do people have access to the data they need to make good decisions? Are analytical tools usable by non-specialists? Is there support available when people encounter analytical challenges they can't solve independently?

Incentive Assessment: Are people rewarded for making data-informed decisions or for achieving predetermined outcomes regardless of method? Do promotion criteria include analytical thinking capabilities? Are there consequences for ignoring relevant data in important decisions?

Implementation Frameworks: From Assessment to Action

Once an organization understands its current state, it needs systematic approaches for building data literacy capabilities. Effective implementation requires what we might call "architectural thinking"—designing learning systems that can evolve and scale rather than simply delivering training content.

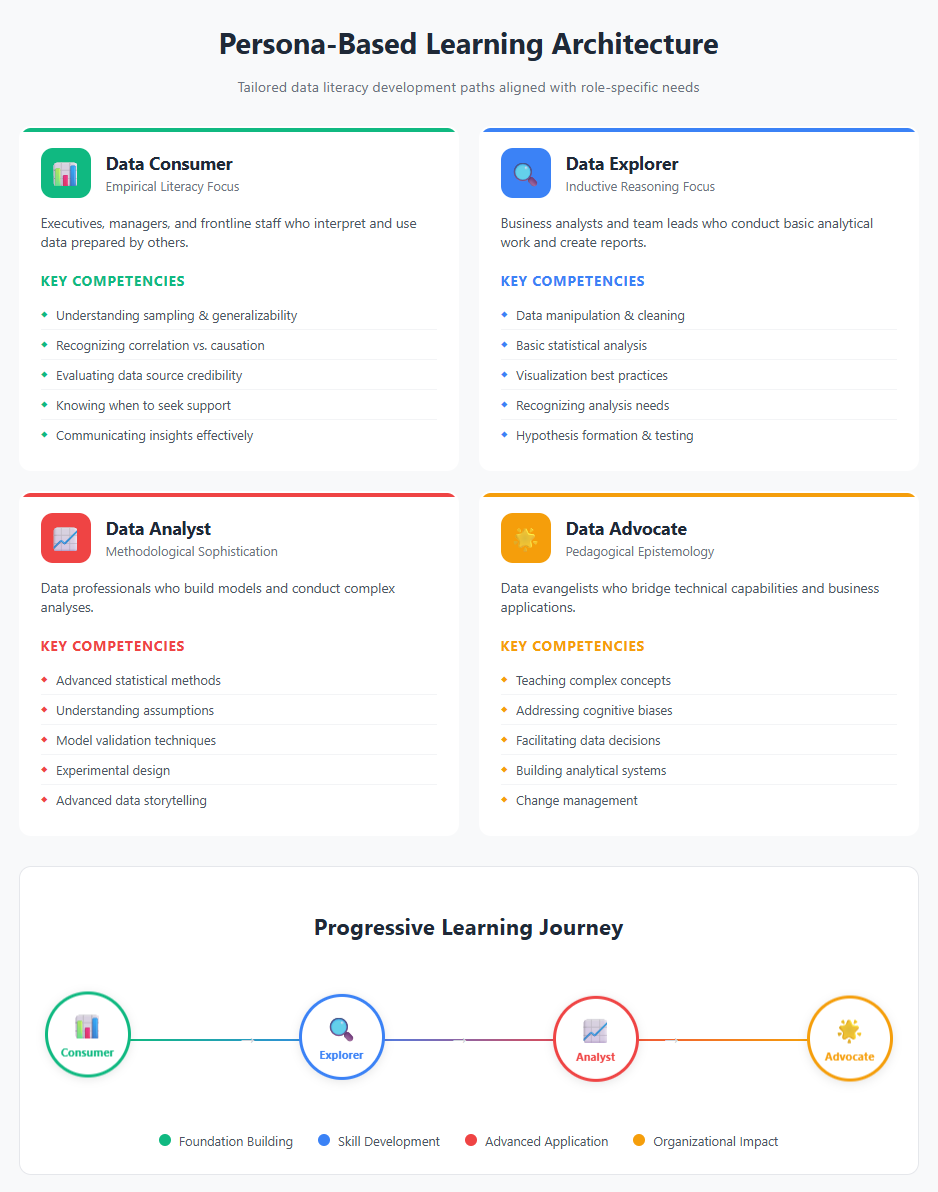

The Persona-Based Learning Architecture

Rather than treating all employees identically, sophisticated implementation approaches recognize that different roles require different types of data literacy. As Emily Hayward, Data & Digital Change Manager at CBRE, explains on the DataFramed podcast, data persona work helps anyone managing an upskilling program evangelize it by creating "tailored learning paths" and aligning learning objectives with business outcomes.

The Data Consumer Persona: These are employees who need to interpret and use data prepared by others but don't typically create analytical work themselves. Think executives reviewing dashboard reports or customer service representatives using data to inform their interactions. Their learning needs focus on developing what we might call "empirical literacy"—the ability to ask good questions about data quality, understand uncertainty, and avoid common interpretation errors.

Key competencies include:

- Understanding sampling and its implications for generalizability

- Recognizing correlation vs. causation issues

- Evaluating data source credibility and potential biases

- Knowing when to seek additional analytical support

- Communicating data-driven insights to non-technical audiences

The Data Explorer Persona: These employees need to conduct basic analytical work themselves—creating simple reports, exploring datasets to answer business questions, and identifying patterns that might require deeper investigation. Their learning needs combine technical skills with what philosophers call "inductive reasoning" capabilities.

Key competencies include:

- Data manipulation and cleaning techniques

- Basic statistical analysis and interpretation

- Visualization best practices for different audiences

- Understanding when more sophisticated analysis is needed

- Hypothesis formation and testing approaches

The Data Analyst Persona: These are employees whose roles involve substantial analytical work—building models, conducting complex analyses, and providing analytical support to others. Their learning needs emphasize what we might call "methodological sophistication" combined with the communication skills to translate analytical insights for non-technical colleagues.

Key competencies include:

- Advanced statistical methods and their appropriate applications

- Understanding assumptions underlying different analytical approaches

- Model validation and testing techniques

- Experimental design principles

- Advanced data visualization and storytelling

The Data Advocate Persona: These are the data evangelists we discussed earlier—people who serve as bridges between technical capabilities and business applications. Their learning needs focus on what we might call "pedagogical epistemology"—understanding how to help others develop more sophisticated analytical thinking.

Key competencies include:

- Teaching analytical concepts to non-technical audiences

- Recognizing and addressing common cognitive biases

- Facilitating data-driven decision-making processes

- Building organizational systems that support good analytical thinking

- Change management strategies for analytical transformation
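One way to operationalize the four personas is as a small lookup structure that a learning platform could use to assign paths. The sketch below is illustrative only: the persona names, focus areas, and competencies come from the text above (abbreviated to two per persona), while the role-to-persona mapping beyond executives and customer service representatives, and the choice of Data Consumer as the default, are assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Persona:
    name: str
    focus: str
    competencies: tuple  # abbreviated here; see the full lists above

PERSONAS = {
    "consumer": Persona("Data Consumer", "empirical literacy", (
        "Understanding sampling and its implications for generalizability",
        "Recognizing correlation vs. causation issues",
    )),
    "explorer": Persona("Data Explorer", "inductive reasoning", (
        "Data manipulation and cleaning techniques",
        "Basic statistical analysis and interpretation",
    )),
    "analyst": Persona("Data Analyst", "methodological sophistication", (
        "Advanced statistical methods and their appropriate applications",
        "Model validation and testing techniques",
    )),
    "advocate": Persona("Data Advocate", "pedagogical epistemology", (
        "Teaching analytical concepts to non-technical audiences",
        "Recognizing and addressing common cognitive biases",
    )),
}

# Hypothetical mapping; only the first two roles are named in the text.
ROLE_TO_PERSONA = {
    "executive": "consumer",
    "customer service representative": "consumer",
    "marketing manager": "explorer",
    "data scientist": "analyst",
    "analytics champion": "advocate",
}

def persona_for(role):
    """Return the persona for a job role, defaulting to Data Consumer (an assumption)."""
    return PERSONAS[ROLE_TO_PERSONA.get(role.lower(), "consumer")]

print(persona_for("Marketing Manager").name)
```

In practice the mapping would come from the role assessment described earlier rather than from job titles alone.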

The Progressive Implementation Model

Effective data literacy implementation follows what we might call "developmental epistemology"—recognizing that analytical sophistication develops through stages that can't be skipped or rushed.

Phase 1: Foundation Building (Months 1-6)

The first phase focuses on what Julie Beynon, Head of Data at Clearbit, calls the fundamental prerequisite: "Clean data is my first ROI." Before people can develop sophisticated analytical thinking, they need access to data they can trust.

This phase involves:

- Data quality assessment and improvement initiatives

- Basic tool training focused on data access and manipulation

- Introduction to fundamental concepts of uncertainty and bias

- Creation of data dictionaries and documentation that make data sources understandable

- Establishment of feedback mechanisms for data quality issues

The philosophical emphasis during this phase is on developing what we might call "empirical humility"—understanding that all data has limitations and that good analysis begins with understanding those limitations.

Phase 2: Skill Development (Months 6-18)

The second phase focuses on building the technical and conceptual capabilities people need to work effectively with data in their roles. This is where the persona-based learning paths become most important.

This phase involves:

- Role-specific analytical training aligned with actual job requirements

- Practice with real organizational data and genuine business problems

- Introduction to more sophisticated concepts about causation, sampling, and statistical inference

- Development of data storytelling and communication capabilities

- Creation of communities of practice around specific analytical challenges

The philosophical emphasis during this phase is on developing what we might call "methodological awareness"—understanding how different analytical approaches answer different kinds of questions and being able to choose appropriate methods for specific problems.

Phase 3: Integration and Advanced Application (Months 18+)

The third phase focuses on embedding analytical thinking into organizational processes and developing advanced capabilities among those who need them.

This phase involves:

- Integration of data-driven decision-making into formal organizational processes

- Advanced training for employees in analyst and advocate roles

- Development of internal teaching and mentoring capabilities

- Creation of systematic approaches for learning from decisions and improving analytical practices over time

- Establishment of feedback loops that help the organization become more sophisticated in its use of data

The philosophical emphasis during this phase is on developing what we might call "institutional empiricism"—creating organizational systems and cultures that consistently reward good analytical thinking and support evidence-based decision-making.

Building Leadership Engagement: The Cultural Transformation Imperative

Perhaps the most critical insight from successful data literacy implementations is that they require what The Data Literacy Project calls "leadership from the top" combined with "a groundswell of engagement from every member of the team." This isn't the typical corporate training dynamic where senior leaders delegate skill development to HR and learning teams.

What Authentic Leadership Engagement Actually Looks Like

Modeling Empirical Thinking in Decision-Making

Authentic leadership engagement means demonstrating the kind of analytical thinking the organization wants to cultivate. This goes far beyond executives occasionally referencing data in presentations. It means systematically modeling what we might call "epistemic leadership"—showing others how to think carefully about evidence and uncertainty.

Effective leaders regularly ask questions like: "What assumptions are we making in this analysis?" "What would we need to see to change our minds about this strategy?" "What are the most significant uncertainties in these projections?" "How might we be wrong about this?"

These questions do more than elicit information—they demonstrate analytical habits of mind that others can observe and adopt. When a CEO consistently asks about data quality and analytical assumptions, it sends a powerful message about what kind of thinking is valued in the organization.

Creating Analytical Accountability

Leadership engagement also means creating organizational systems that reward empirical thinking and hold people accountable for analytical rigor. This involves changes to performance management, promotion criteria, and resource allocation processes.

For example, some organizations have begun including "analytical thinking" as a specific competency in job descriptions and performance reviews. Others have created decision-making processes that require explicit consideration of data quality and analytical limitations before major choices are finalized.

The key insight is that cultural change requires what organizational theorists call "structural reinforcement"—changing the systems and incentives that shape behavior, not just exhorting people to think differently.

Philosophical Leadership: The Deeper Transformation

The most sophisticated leadership engagement involves what we might call "philosophical leadership"—helping the organization grapple with deeper questions about knowledge, evidence, and decision-making that determine how data literacy efforts will ultimately succeed or fail.

This means addressing questions like: How comfortable is the organization with uncertainty and ambiguity? Do people feel safe acknowledging when they don't know something or when their previous assumptions were wrong? Are there cultural factors that make it difficult for people to challenge conventional wisdom with data?

These philosophical dimensions often determine whether data literacy initiatives create genuine transformation or simply add a layer of analytical vocabulary to existing decision-making patterns.

Overcoming Resistance Through Philosophical Understanding

Resistance to data literacy initiatives often reflects deeper philosophical disagreements about the nature of knowledge and decision-making. Some people believe strongly in intuition and experience as guides to decision-making. Others worry that emphasis on data will reduce human judgment to mechanical calculation.

Effective leadership engagement addresses these concerns directly rather than dismissing them as "resistance to change." It involves helping people understand how empirical thinking enhances rather than replaces human judgment, and how analytical rigor can make intuitive insights more reliable rather than eliminating them.

This requires what we might call "philosophical diplomacy"—the ability to help people see connections between their existing values and analytical thinking rather than positioning them as competitors. For example, someone who values customer relationships might be shown how data analysis can help them better understand customer needs. Someone who prides themselves on operational excellence might learn how analytical thinking can help them identify improvement opportunities they might otherwise miss.

The Change Management Philosophy: Transforming How Organizations Think

Implementing data literacy at scale requires understanding that you're not just teaching new skills—you're attempting to change fundamental patterns of organizational thinking that may have been established over decades. This requires what we might call "epistemological change management"—approaches that recognize the deep cognitive and cultural shifts involved in becoming more empirically sophisticated.

Understanding Cognitive Resistance

People resist data literacy initiatives for reasons that go far beyond discomfort with technology. Often, resistance reflects well-founded concerns about autonomy, expertise, and professional identity. Someone who has been successful making decisions based on experience and intuition may legitimately worry that emphasis on data analysis will devalue their contributions or expose them to criticism.

Effective change management addresses these concerns by positioning data literacy as enhancing existing capabilities rather than replacing them. The goal is to help people see analytical thinking as a tool that can make their experiential knowledge more powerful rather than obsolete.

The Philosophy of Incremental Empiricism

Rather than demanding immediate conversion to "data-driven decision making," effective implementation strategies recognize that analytical sophistication develops gradually. People need opportunities to experience the value of more empirical approaches in low-stakes situations before they'll adopt them for high-stakes decisions.

This might involve starting with analytical approaches to problems where people already feel uncertain rather than areas where they have strong existing opinions. It means celebrating incremental improvements in analytical thinking rather than holding out for dramatic transformations.

Creating Learning-Safe Environments

Perhaps most importantly, effective change management creates what we might call "epistemic safety"—environments where people feel comfortable acknowledging uncertainty, admitting mistakes, and changing their minds when presented with contrary evidence.

Creating Data Evangelists: The Multiplier Effect

One of the most important insights from successful data literacy implementations is that sustainable change requires what Qlik's Data Literacy Program calls "evangelists" who can "mentor others in helping your organization lead with data." These aren't necessarily the most technically sophisticated data practitioners—they're people who combine solid analytical thinking with communication skills and organizational credibility.

The Philosophy of Peer Learning

The evangelist model works because it addresses a fundamental challenge in data literacy development: the gap between technical knowledge and practical application. As DataCamp research shows, effective programs need to "personalize the learning experience based on the needs of the individual" rather than take a "one-size-fits-all approach to data literacy."

But the deeper insight is that learning analytical thinking is fundamentally social. People don't just need to understand statistical concepts in the abstract. They need to see how analytical thinking applies to the specific problems they face in their roles, and they need feedback on their own analytical reasoning from people who understand their context.

Data evangelists serve as translators between the philosophical foundations of good data thinking and the specific challenges people face in their roles. They can help a marketing manager understand why sample size matters for A/B testing, or help a product manager think through the causal assumptions underlying customer behavior analysis.
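The A/B testing point lends itself to a quick demonstration an evangelist could walk through with a marketing manager. The sketch below uses the standard normal-approximation formula for comparing two proportions. The conversion rates, significance level, and power are hypothetical defaults, not values from any particular program.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.80):
    """Approximate users needed per variant to detect p_control vs p_variant.

    Uses the normal-approximation formula for a two-sided test of two
    proportions. alpha and power are conventional defaults (assumptions).
    """
    if p_control == p_variant:
        raise ValueError("The two rates must differ to compute a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_variant - p_control) ** 2)

# Hypothetical scenario: baseline conversion of 10%, hoping to detect 12%.
n_two_point_lift = sample_size_per_variant(0.10, 0.12)
# Halving the lift we want to detect requires far more traffic.
n_one_point_lift = sample_size_per_variant(0.10, 0.11)

print(n_two_point_lift, n_one_point_lift)
```

The useful teaching moment is the comparison: a smaller expected lift needs a much larger sample, which is why a test stopped after a few hundred visitors rarely supports the conclusion drawn from it.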

Building Analytical Teaching Capabilities

Creating effective evangelists requires more than identifying enthusiastic volunteers. It requires what we might call "pedagogical training"—helping them understand how to teach empirical thinking effectively.

Understanding Common Cognitive Biases

Effective data evangelists understand the psychological barriers that prevent people from thinking analytically. This includes classic biases like confirmation bias (seeking information that confirms existing beliefs) and availability bias (overweighting easily recalled examples), but also more subtle issues like the tendency to confuse correlation with causation or to assume that more data always means better decisions.

They learn to recognize these patterns in others' thinking and develop strategies for helping people overcome them without making them feel criticized or defensive.

Developing Epistemic Empathy

Perhaps most importantly, data evangelists develop what we might call "epistemic empathy": the ability to understand how the world looks from the perspective of someone with a different analytical background and experience. This helps them bridge the gap between technical concepts and practical applications in ways that feel relevant and accessible.

Creating Teaching Moments

The most effective data evangelists become skilled at recognizing and creating "teachable moments"—situations where people are naturally curious about analytical questions and therefore receptive to learning new concepts. Rather than formal training sessions, much of their impact comes through informal conversations, meeting contributions, and project collaborations where they can model analytical thinking and provide gentle coaching.

The Network Effect: How Learning Spreads

Data evangelists create what network theorists call "positive contagion"—beneficial behaviors and mindsets that spread through organizational relationships. Understanding how this process works helps organizations design more effective implementation strategies.

Hub-and-Spoke vs. Distributed Models

Traditional training approaches often use hub-and-spoke models where a central team provides training to everyone else. Data evangelist approaches create more distributed learning networks where multiple people throughout the organization can provide guidance and support.

This is more resilient because it doesn't depend on a small number of experts being available to everyone who needs help. It's also more effective because evangelists understand the specific contexts and challenges faced by people in different roles and departments.

Creating Learning Momentum

As more people develop analytical capabilities, they can support others in their development, creating positive feedback loops. Someone who was a student last month becomes a teacher this month, helping someone else develop the same capabilities they just acquired.

This peer teaching is often more effective than expert instruction because the teacher recently went through the same learning process and understands common points of confusion and practical applications.

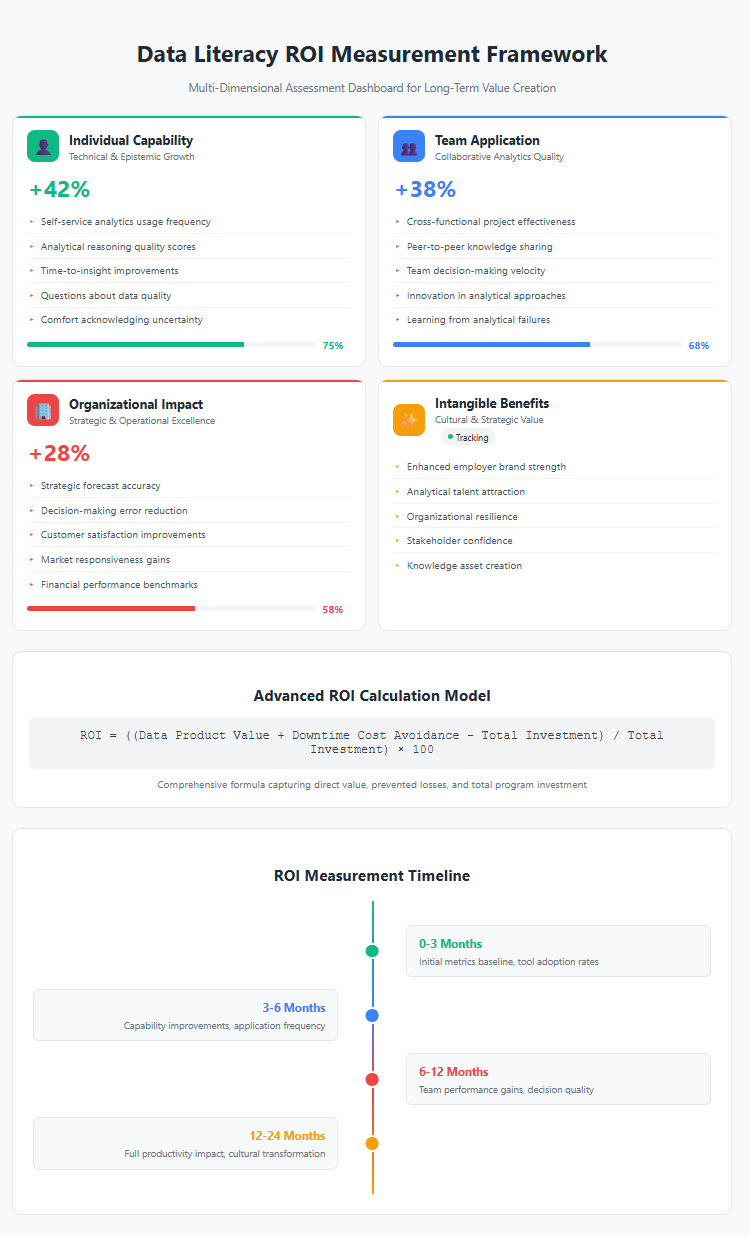

Measurement and ROI: Beyond Training Metrics

Traditional training evaluation typically relies on the Kirkpatrick Model, which measures reaction (did people like the training?), learning (did they acquire new knowledge?), behavior (are they applying what they learned?), and results (is it impacting business outcomes?). These dimensions remain relevant for data literacy programs, but they require much more sophisticated measurement approaches.

The Philosophy of Long-Term Assessment

As Data Society research reveals, "the return on investment for data and AI training programs is ultimately measured via productivity. You typically need a full year of data to determine effectiveness, and the real ROI can be measured over 12 to 24 months." This timeline reflects the reality that data literacy involves what cognitive scientists call "cognitive restructuring"—changing how people think about evidence and decision-making, not just acquiring new technical skills.

This extended timeline requires what we might call "developmental measurement"—approaches that track progression over time rather than looking for immediate improvements.

The Multi-Dimensional ROI Framework

Effective measurement approaches track multiple dimensions simultaneously, recognizing that data literacy transformation involves changes at individual, team, and organizational levels. The most sophisticated organizations use what we might call a "360-degree assessment model" that captures both quantitative metrics and qualitative transformations.

Individual Capability Metrics

These assess whether people are developing the technical skills to work with data effectively:

Technical Proficiency Indicators:

- Frequency of self-service analytics usage (tracked through platform analytics)

- Complexity of analyses attempted without support (beginner/intermediate/advanced)

- Time-to-insight metrics for common analytical tasks

- Error rates in data interpretation and analysis

- Tool adoption rates across different analytical platforms

Analytical Reasoning Quality:

- Assessment scores on standardized data literacy evaluations

- Quality ratings of analytical reports and presentations

- Peer feedback on analytical contributions to projects

- Accuracy of predictions and recommendations in tracked decisions

- Improvement in analytical thinking as measured through scenario-based assessments

Individual Epistemic Metrics

These evaluate whether people are developing the philosophical sophistication that underlies effective data use:

Empirical Sophistication Indicators:

- Frequency of questions about data quality and methodology in meetings

- Quality of assumptions identification in analytical work

- Comfort level with acknowledging uncertainty (measured through surveys and behavioral observation)

- Tendency to seek disconfirming evidence (tracked through analysis review processes)

- Improvement in causal reasoning as measured through specialized assessments

Decision-Making Enhancement:

- Time between data availability and decision implementation

- Quality of decision rationale documentation

- Frequency of decision reversals based on new evidence

- Stakeholder satisfaction with data-driven recommendations

- Long-term accuracy of decisions tracked through outcome monitoring

Team Application Metrics

These measure whether improved individual capabilities are translating into better team performance:

Collaborative Analytics Quality:

- Effectiveness of cross-functional analytical projects (measured through project outcome assessment)

- Quality of data discussions in team meetings (evaluated through structured observation)

- Speed of team decision-making on data-related issues

- Frequency and quality of peer-to-peer analytical knowledge sharing

- Innovation rate in analytical approaches within teams

Organizational Learning Velocity:

- Time required for teams to adopt new analytical techniques

- Effectiveness of teams in learning from analytical failures

- Quality of analytical process improvement initiatives

- Cross-team knowledge transfer effectiveness

- Team resilience in handling analytical challenges

Organizational Impact Metrics

These track whether improved data literacy is translating into better business outcomes:

Strategic Decision Quality:

- Accuracy of strategic forecasts and planning assumptions

- Speed of strategic decision-making processes

- Quality of risk identification and mitigation strategies

- Effectiveness of resource allocation decisions

- Innovation rate in business models and processes

Operational Excellence:

- Reduction in decision-making errors with measurable costs

- Improvement in customer satisfaction attributed to data-driven initiatives

- Employee engagement scores related to empowerment and analytical capability

- Market responsiveness and competitive positioning improvements

- Financial performance relative to industry benchmarks

Advanced ROI Calculation Methodologies

The most sophisticated approaches to data literacy ROI move beyond simple cost-benefit calculations to capture the full spectrum of value creation. These methodologies recognize that data literacy generates value through multiple channels that often compound over time.

The Data Product Value Formula

Drawing from research by Svitla Systems, effective ROI calculation should use a comprehensive formula:

ROI = ((Data Product Value + Downtime Cost Avoidance - Total Investment) / Total Investment) × 100

Data Product Value includes:

- Revenue generated from data-driven products or services

- Cost savings from improved operational efficiency

- Value of better strategic decisions (measured through outcome tracking)

- Customer satisfaction improvements with quantifiable business impact

- Risk reduction value (calculated through probability-adjusted impact assessment)

Downtime Cost Avoidance captures:

- Productivity losses prevented through better data quality

- Decision delays avoided through improved analytical capabilities

- Strategic mistakes prevented through better empirical thinking

- Opportunity costs avoided through faster time-to-insight

- Compliance risks mitigated through better data governance

Total Investment encompasses:

- Direct training and program costs

- Technology infrastructure investments

- Time investment by employees and leadership

- Opportunity costs of alternative training approaches

- Ongoing maintenance and improvement costs
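As a worked example, the Data Product Value formula above can be expressed as a small function. The dollar figures are hypothetical and exist only to show the arithmetic. Estimating each input is the hard part and is not addressed here.

```python
def data_product_roi(data_product_value, downtime_cost_avoidance, total_investment):
    """ROI (%) = ((value + cost avoidance - investment) / investment) * 100."""
    if total_investment <= 0:
        raise ValueError("total_investment must be positive")
    net_gain = data_product_value + downtime_cost_avoidance - total_investment
    return net_gain / total_investment * 100

# Hypothetical annual figures in dollars.
roi_percent = data_product_roi(
    data_product_value=900_000,       # revenue, savings, decision value, etc.
    downtime_cost_avoidance=150_000,  # productivity losses and delays prevented
    total_investment=600_000,         # training, tooling, employee time, upkeep
)
print(f"ROI: {roi_percent:.1f}%")  # (900,000 + 150,000 - 600,000) / 600,000 = 75.0%
```

Because the investment is subtracted in the numerator, 0% means break-even under this formula, and any negative result means the program has not yet paid for itself.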

The Data Maturity ROI Model

This approach, also highlighted by Svitla Systems, "grades" organizational data maturity and associates dollar values with each level:

ROI = ((Employees × Maturity Value $) / Data & Analytics Team Cost) × 100

This methodology recognizes that data literacy creates value by improving overall organizational capabilities rather than just individual skills. Organizations track progression through maturity levels and calculate the economic value associated with each improvement.
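The maturity formula can be sketched in the same way. The per-employee dollar values for each maturity level below are invented placeholders, not figures from Svitla Systems. An organization would need to derive its own values.

```python
# Hypothetical per-employee dollar value assigned to each maturity level.
MATURITY_VALUE_USD = {1: 0, 2: 500, 3: 1_500, 4: 3_000, 5: 5_000}


def maturity_roi(employees: int, maturity_level: int, team_cost: float) -> float:
    """Return (Employees x Maturity Value $) / Team Cost x 100."""
    if team_cost <= 0:
        raise ValueError("team_cost must be positive")
    if maturity_level not in MATURITY_VALUE_USD:
        raise ValueError(f"unknown maturity level: {maturity_level}")
    total_value = employees * MATURITY_VALUE_USD[maturity_level]
    return total_value / team_cost * 100


# 1,000 employees at level 3 with a $1M data and analytics team cost.
print(maturity_roi(1000, 3, 1_000_000))  # 150.0
```

Note that this formula, as written, does not subtract the team cost from the value, so a result of 100 corresponds to value equal to cost rather than to a doubling of the investment.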

The Productivity-First Approach

Following insights from Data Society, the most reliable ROI measurements focus on productivity improvements as the primary indicator of program success. This involves:

Labor Cost vs. Output Analysis:

- Measuring employee efficiency before and after training

- Tracking automation of routine processes and time savings

- Calculating redirection of time toward higher-value activities

- Assessing improvement in decision-making speed and accuracy

- Monitoring reduction in rework and error correction time
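One simple way to turn a before-and-after comparison into a dollar figure is to value the hours saved at the loaded labor rate. The sketch below assumes a single recurring task and hypothetical numbers. It ignores quality changes and any effects unrelated to training, which a real analysis would need to control for.

```python
def annual_time_savings_value(
    hours_before: float,
    hours_after: float,
    tasks_per_year: int,
    hourly_cost: float,
) -> float:
    """Value of time saved per year on one recurring task."""
    saved_hours = (hours_before - hours_after) * tasks_per_year
    return saved_hours * hourly_cost


# Hypothetical: a report that took 4 hours now takes 2.5, and is
# produced 200 times a year by staff costing $60 per hour.
print(annual_time_savings_value(4.0, 2.5, 200, 60.0))  # 18000.0
```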

System Adoption and Utilization Metrics:

- Rates of AI tool adoption and effective usage

- Employee feedback on training effectiveness and applicability

- Long-term retention and application of analytical skills

- Cross-functional collaboration improvements

- Innovation in problem-solving approaches

The Intangible Benefits Framework

While quantitative measures are important, sophisticated ROI assessment also captures intangible benefits that often represent the largest source of long-term value. As Svitla Systems research emphasizes, organizations shouldn't "overlook intangible benefits" including "improved decision-making, data literacy, increased competitive advantage, better compliance, higher employee satisfaction, and excellent innovation capabilities."

Cultural Transformation Value:

- Improved organizational reputation and employer brand strength

- Enhanced ability to attract and retain analytical talent

- Increased employee engagement and job satisfaction

- Better organizational resilience and adaptability

- Stronger stakeholder confidence in decision-making processes

Strategic Capability Enhancement:

- Improved competitive positioning in data-rich markets

- Enhanced innovation capabilities and speed-to-market

- Better risk management and regulatory compliance

- Stronger customer relationships through data-driven insights

- Increased organizational learning velocity and adaptation capability

Knowledge Asset Creation:

- Development of proprietary analytical approaches and methodologies

- Creation of institutional knowledge about effective data use

- Building of analytical capabilities that compound over time

- Establishment of data-driven decision-making processes

- Development of organizational memory and learning systems

Measurement Dashboard Design and Implementation

Effective measurement requires sophisticated dashboard designs that can track multiple dimensions of progress simultaneously while remaining accessible to different stakeholder groups. The best implementations use what we might call "stakeholder-adaptive dashboards" that present the same underlying data in formats appropriate for different audiences.

Executive Dashboard Elements:

- High-level ROI summary with trend analysis

- Strategic impact metrics tied to business objectives

- Competitive positioning indicators

- Risk and opportunity identification summaries

- Investment justification and future planning insights

Program Manager Dashboard Elements:

- Detailed progression tracking across all measurement dimensions

- Individual and team performance analytics

- Resource utilization and efficiency metrics

- Problem identification and intervention planning tools

- Success story documentation and sharing capabilities

Participant Dashboard Elements:

- Personal progress tracking and goal achievement

- Peer comparison and collaboration opportunities

- Learning resource recommendations and accessibility

- Achievement recognition and milestone celebration

- Self-assessment tools and improvement planning guidance
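The idea of presenting the same underlying data differently for each audience can be sketched as three view functions over one shared set of records. The record fields, names, and values below are hypothetical. A real dashboard would read from the organization's learning and business systems.

```python
from statistics import mean

# Hypothetical underlying records shared by every view.
RECORDS = [
    {"team": "Marketing", "person": "A", "modules_done": 4, "hours_saved": 12.0},
    {"team": "Marketing", "person": "B", "modules_done": 2, "hours_saved": 5.0},
    {"team": "Finance", "person": "C", "modules_done": 5, "hours_saved": 20.0},
]


def executive_view(records):
    """High-level summary: total impact and reach."""
    return {
        "total_hours_saved": sum(r["hours_saved"] for r in records),
        "participants": len(records),
    }


def program_manager_view(records):
    """Average module completion per team."""
    by_team = {}
    for r in records:
        by_team.setdefault(r["team"], []).append(r["modules_done"])
    return {team: mean(done) for team, done in by_team.items()}


def participant_view(records, person):
    """Personal progress for a single participant."""
    for r in records:
        if r["person"] == person:
            return {"modules_done": r["modules_done"],
                    "hours_saved": r["hours_saved"]}
    raise KeyError(person)


print(executive_view(RECORDS))
print(program_manager_view(RECORDS))
print(participant_view(RECORDS, "B"))
```

Keeping one source of records and deriving each view from it helps ensure that executives, program managers, and participants never see conflicting numbers.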

Longitudinal Assessment and Continuous Improvement

The most sophisticated measurement approaches recognize that data literacy development is an ongoing process that requires continuous assessment and program refinement. This involves what we might call "adaptive measurement"—evolving assessment criteria and methodologies as organizational capabilities mature.

Quarterly Assessment Cycles:

- Comprehensive capability assessment using standardized instruments

- Stakeholder feedback collection and analysis

- Program effectiveness evaluation and adjustment planning

- Resource allocation optimization based on emerging needs

- Success celebration and challenge identification

Annual Strategic Reviews:

- Comprehensive ROI calculation and business impact assessment

- Competitive benchmarking and market positioning analysis

- Long-term trend analysis and future planning

- Investment justification and budget planning for subsequent years

- Strategic program evolution planning based on organizational growth

Continuous Monitoring Systems:

- Real-time usage analytics from analytical platforms and tools

- Ongoing feedback collection through embedded survey mechanisms

- Performance indicator tracking through integrated business systems

- Early warning systems for program challenges or opportunities

- Automated reporting and alert systems for key stakeholders
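An early warning system can start as something very simple. The sketch below flags any week in which active usage of an analytics tool falls by more than a chosen fraction compared with the previous week. The 20% threshold and the usage numbers are assumptions for illustration, not recommended values.

```python
def usage_alerts(weekly_active_users, drop_threshold=0.2):
    """Return indexes of weeks whose usage fell by more than
    drop_threshold (as a fraction) relative to the prior week."""
    alerts = []
    for week in range(1, len(weekly_active_users)):
        previous = weekly_active_users[week - 1]
        current = weekly_active_users[week]
        if previous > 0 and (previous - current) / previous > drop_threshold:
            alerts.append(week)
    return alerts


# Hypothetical weekly active user counts.
print(usage_alerts([100, 95, 70, 72]))  # [2]
```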

Common Implementation Pitfalls and Philosophical Solutions

Most data literacy implementations fail in predictable ways, and these failures often reflect deeper philosophical misunderstandings about the nature of learning and organizational change.

The "Technical Skills First" Fallacy

Many organizations begin data literacy initiatives by teaching people to use specific software tools, assuming that technical proficiency will naturally lead to better analytical thinking. This reflects what we might call "tool-first epistemology"—the mistaken belief that having analytical tools is equivalent to having analytical capabilities.

As MIT Sloan research suggests, businesses have "spent too much time training people on hard-to-use technical tools instead of emphasizing data" and should "flip it—make the technology super easy so that you can spend more time on data."

The philosophical solution involves recognizing that analytical thinking is primarily conceptual rather than technical. People need to understand concepts like sampling, causation, and uncertainty before they can use analytical tools effectively, not after.

The "Information Transfer" Misconception

Traditional training approaches assume that knowledge can be transferred from experts to novices through instruction, but analytical thinking doesn't work this way. It develops through what John Dewey called "learning by doing"—engaging with real problems and receiving feedback on the quality of analytical reasoning.

This reflects what philosophers call the "tacit knowledge problem"—much of what experts know about analytical thinking can't be made explicit in training materials. It has to be learned through experience and practice.

The philosophical solution involves creating opportunities for guided practice with real organizational problems rather than abstract exercises. People need to experience the value of analytical thinking in contexts that matter to them before they'll adopt it broadly.

The "Individual Focus" Limitation

Most data literacy programs focus on developing individual skills, but analytical thinking is often inherently collaborative. Complex problems require diverse perspectives, different types of expertise, and systematic approaches to combining insights from multiple sources.

This reflects what sociologists call "methodological individualism"—the assumption that organizational capabilities can be understood as the sum of individual capabilities. But data literacy is more like language—its value emerges through interaction and shared understanding rather than individual proficiency.

The philosophical solution involves designing learning experiences that are inherently collaborative and creating organizational systems that support effective analytical collaboration.

The Integration Imperative: Bringing It All Together

Effective data literacy implementation isn't about choosing between philosophical sophistication and practical results—it's about creating approaches that honor both dimensions simultaneously. The organizations that succeed understand that sustainable data literacy requires deep changes in how people think about evidence, decision-making, and organizational learning.

The Architecture of Empirical Organizations

The ultimate goal of data literacy implementation is creating what we might call "empirically intelligent organizations"—institutions that are systematically good at gathering evidence, reasoning about uncertainty, and learning from experience.

This requires what we might call "institutional empiricism"—embedding analytical thinking into the fundamental processes and structures that guide organizational behavior. It means creating decision-making processes that systematically surface and test assumptions, performance management systems that reward empirical thinking, and organizational cultures that treat intellectual curiosity and epistemic humility as core values.

The Philosophical Foundation of Practical Success

The organizations that achieve the most impressive practical results from data literacy initiatives are typically those that take the philosophical dimensions most seriously. They understand that sustainable change requires helping people develop new ways of thinking about knowledge, evidence, and decision-making rather than simply acquiring new technical skills.

This means building programs that combine rigorous assessment of both technical and epistemic capabilities, creating leadership engagement that models empirical sophistication, developing real-time learning infrastructure that delivers contextual guidance at teachable moments, cultivating data evangelists who can translate analytical thinking into practical guidance, and implementing measurement approaches that track both immediate skill development and longer-term cognitive sophistication.

The stakes remain high. The research cited throughout this post consistently shows that organizations with higher levels of data literacy outperform their peers across multiple dimensions. But achieving these benefits requires moving beyond surface-level training approaches to create comprehensive organizational transformation.

The path forward involves recognizing that data literacy is fundamentally about developing better ways of thinking about evidence and uncertainty. When we can help people become more sophisticated empirical reasoners—not just more skilled tool users—we create the foundation for sustained competitive advantage in an increasingly data-rich world.

Infrastructure for Real-Time Learning: Building the Teachable Moment Architecture

Traditional training approaches fail because they ignore how people actually learn to work with data effectively. The insights from The Decoded Company about "teachable moments" and "real-time learning" aren't just nice theoretical concepts—they require substantial infrastructure investment to implement at scale.

Creating effective real-time learning infrastructure involves three interconnected systems: contextual guidance systems, community knowledge platforms, and embedded analytics coaching.

Contextual guidance systems provide what we might call "just-in-time epistemology"—helping people understand not just how to perform specific analytical techniques but when those techniques are appropriate and what their limitations might be. This might involve decision trees that help someone choose between different statistical approaches, or embedded guidance that explains the assumptions behind different analytical methods.
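A decision aid of this kind can be sketched as a small lookup that pairs a suggested method with the assumption a user should check first. This is a deliberately simplified illustration. The function name and the guidance wording are hypothetical, and a production system would cover many more cases and caveats.

```python
# Keys are (outcome type, predictor type); values pair a suggested
# method with a limitation worth checking before relying on it.
GUIDANCE = {
    ("numeric", "categorical"):
        "Compare group means (t-test or ANOVA). Check group sizes and spread.",
    ("categorical", "categorical"):
        "Chi-square test of independence. Check expected cell counts are not too small.",
    ("numeric", "numeric"):
        "Correlation or linear regression. Check for linearity and outliers.",
    ("categorical", "numeric"):
        "Logistic regression. Check there are enough cases in each outcome class.",
}


def suggest_method(outcome: str, predictor: str) -> str:
    """Return guidance for the given variable types
    ('numeric' or 'categorical')."""
    key = (outcome, predictor)
    if key not in GUIDANCE:
        raise ValueError(f"unsupported combination: {key}")
    return GUIDANCE[key]


print(suggest_method("numeric", "categorical"))
```

The point of the design is that every suggestion arrives with a limitation attached, so the guidance teaches when a technique applies and not only how to run it.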

Community knowledge platforms create what researchers call "communities of practice" around data use. These aren't traditional forums or SharePoint sites, but dynamic environments where people can surface questions about specific data challenges they're facing and get responses from colleagues who have dealt with similar situations. The key insight is that much data literacy learning is inherently social—people learn best when they can see how others approach similar problems and when they can get feedback on their own analytical thinking.

Embedded analytics coaching involves building advisory capabilities directly into the tools people use for data analysis. Rather than sending someone to a separate training environment to learn about statistical significance, the analytics platform itself provides contextual guidance about when statistical tests are appropriate and how to interpret their results.

Creating Data Evangelists: The Multiplier Effect

One of the most important insights from successful data literacy implementations is that sustainable change requires what Qlik's Data Literacy Program calls "evangelists" who can "mentor others in helping your organization lead with data." These aren't necessarily the most technically sophisticated data practitioners—they're people who combine solid analytical thinking with communication skills and organizational credibility.

The evangelist model works because it addresses a fundamental challenge in data literacy development: the gap between technical knowledge and practical application. As DataCamp research shows, effective programs must "personalize the learning experience based on the needs of the individual" rather than rely on a "one-size-fits-all approach to data literacy."

Data evangelists serve as translators between the philosophical foundations of good data thinking and the specific challenges people face in their roles. They can help a marketing manager understand why sample size matters for A/B testing, or help a product manager think through the causal assumptions underlying customer behavior analysis.
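To make the A/B testing example concrete, an evangelist might walk a marketing manager through a quick sample size estimate. The sketch below uses the standard normal-approximation formula for comparing two proportions with a two-sided test. The conversion rates, significance level, and power are hypothetical choices, and the function name is our own.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p1: float, p2: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in each group to detect a change
    in conversion rate from p1 to p2 (two-sided test)."""
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance cutoff
    z_beta = NormalDist().inv_cdf(power)           # power cutoff
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return ceil(n)


# Hypothetical: baseline conversion of 10%, hoping to detect 12%.
print(sample_size_per_group(0.10, 0.12))
# A smaller expected lift needs many more users per group.
print(sample_size_per_group(0.10, 0.11))
```

Running both calls side by side shows why sample size matters: halving the expected lift roughly quadruples the number of users required, which is often the moment the intuition clicks.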

But creating effective evangelists requires more than identifying enthusiastic volunteers. It requires what we might call "pedagogical training"—helping them understand how to teach empirical thinking effectively. This involves understanding common cognitive biases that interfere with good data interpretation, knowing how to help people develop what philosophers call "epistemic humility," and being able to explain complex statistical concepts in intuitive terms.

Measurement and ROI: Beyond Training Metrics

Traditional training evaluation focuses on what's called the "Kirkpatrick Model"—measuring reaction (did people like the training?), learning (did they acquire new knowledge?), behavior (are they applying what they learned?), and results (is it impacting business outcomes?). These dimensions remain relevant for data literacy programs, but they require much more sophisticated measurement approaches.

As Data Society research reveals, "the return on investment for data and AI training programs is ultimately measured via productivity. You typically need a full year of data to determine effectiveness, and the real ROI can be measured over 12 to 24 months." This timeline reflects the reality that data literacy involves what cognitive scientists call "cognitive restructuring"—changing how people think about evidence and decision-making, not just acquiring new technical skills.

Effective measurement approaches track multiple dimensions simultaneously. Capability metrics assess whether people are developing the technical skills to work with data effectively. Application metrics measure whether they're actually using these skills in their work. Quality metrics evaluate whether their analytical thinking is becoming more sophisticated. Impact metrics track whether improved data literacy is translating into better business outcomes.

But perhaps most importantly, mature measurement approaches track what we might call "epistemic indicators"—signs that people are developing the philosophical sophistication that underlies effective data use. Are they asking better questions about data sources? Are they more comfortable acknowledging uncertainty in their analyses? Are they better at recognizing the limits of what data can tell them?

Common Implementation Pitfalls and Philosophical Solutions

Most data literacy implementations fail in predictable ways, and these failures often reflect deeper philosophical misunderstandings about the nature of learning and organizational change.

The "Tool-First" Fallacy treats data literacy as primarily about mastering specific software platforms rather than developing analytical thinking capabilities. As MIT Sloan research suggests, businesses have "spent too much time training people on hard-to-use technical tools instead of emphasizing data" and should "flip it—make the technology super easy so that you can spend more time on data."

The "Front-Loading" Trap assumes people can learn data literacy concepts in abstract training environments and then apply them effectively in their actual work contexts. This ignores the fundamental insight from The Decoded Company that effective learning happens at "teachable moments" when people encounter real problems they need to solve.

The "Individual Skills" Misconception treats data literacy as something individuals possess rather than as an organizational capability that emerges through culture, processes, and systems working together. This misses the crucial point from Data Literacy in Practice that "organizational data literacy" matters more than individual competencies.

The "Quick Wins" Syndrome focuses on easily measurable short-term improvements rather than the deeper cognitive and cultural changes that determine long-term success. As CastorDoc research emphasizes, "clean data is my first ROI"—the foundation must be solid before you can expect sophisticated analytical thinking to flourish.

Each of these pitfalls reflects inadequate appreciation for the complexity of what data literacy really involves. The solutions require what we might call "philosophical implementation"—approaches that honor the deeper dimensions of learning and change while still delivering practical results.

Scaling and Sustainability: The Long-Term View

The ultimate test of any data literacy implementation is whether it creates self-sustaining momentum toward more sophisticated data use throughout the organization. This requires what organizational theorists call "institutional embedding"—making data-driven thinking so fundamental to how the organization operates that it persists even as people and priorities change.

Successful scaling involves several interconnected strategies. Progressive sophistication ensures that as people master basic data literacy concepts, they have pathways to develop more advanced capabilities. Cross-functional integration breaks down silos between technical and business teams by creating shared languages and common analytical frameworks. Continuous evolution keeps the program responsive to changing business needs and technological capabilities.

But perhaps most importantly, sustainable programs create what we might call "epistemic infrastructure"—organizational systems and cultures that consistently reward good analytical thinking and empirical curiosity. This means promoting people who demonstrate sophisticated data judgment, incorporating analytical thinking into performance reviews, and creating decision-making processes that systematically surface and address the assumptions underlying important choices.

As the authors of The Decoded Company recognize, the goal isn't just to train people to use data—it's to create "engineered ecosystems" where "technology can be a coach, personalizing processes to the individual based on experience and offering training interventions precisely at the teachable moment."

This blog post is the second in our series exploring the deeper dimensions of data literacy. Next, we'll examine specific case studies of organizations that have successfully implemented these approaches and the lessons learned from their experiences.