Building Privacy Programs That Actually Work: Architecture for Human-Centered Data Protection

The Bottom Line Up Front: Successful privacy programs aren't built on policies and training—they're built on organizational structures that make privacy-respecting behaviors the path of least resistance. Most privacy programs fail because they try to layer privacy onto existing systems through rules and restrictions. The programs that succeed embed privacy into the architecture of how work gets done, making protection the default rather than the exception.

The Architecture of Failure: Why Most Privacy Programs Don't Work

Here's a story that repeats itself across organizations worldwide: A company invests heavily in privacy. They hire a Chief Privacy Officer, implement comprehensive training programs, deploy state-of-the-art consent management platforms, and create detailed privacy policies. Employees dutifully complete their annual privacy training, clicking through slides about data minimization and purpose limitation. The privacy team publishes regular updates about GDPR compliance and privacy best practices.

Yet six months later, a routine audit reveals that marketing has been sharing customer data with dozens of third parties without proper controls. Engineering has been logging sensitive user behavior "just in case we need it later." Sales has been enriching contact lists with purchased data that violates their own privacy commitments. The product team has launched features that collect unnecessary personal information because "that's how the competitor does it."

What went wrong? The organization made a fundamental architectural error: they tried to build privacy through addition rather than integration. They added privacy requirements on top of existing workflows, creating friction between "doing the job" and "protecting privacy." When these inevitably conflict, the job wins.

As Stewart Brand writes in How Buildings Learn, "All buildings are predictions. All predictions are wrong." The same applies to privacy programs. Most are built on predictions about how people will behave when given privacy rules. These predictions are almost always wrong because they ignore a fundamental truth: systems shape behavior more powerfully than rules constrain it.

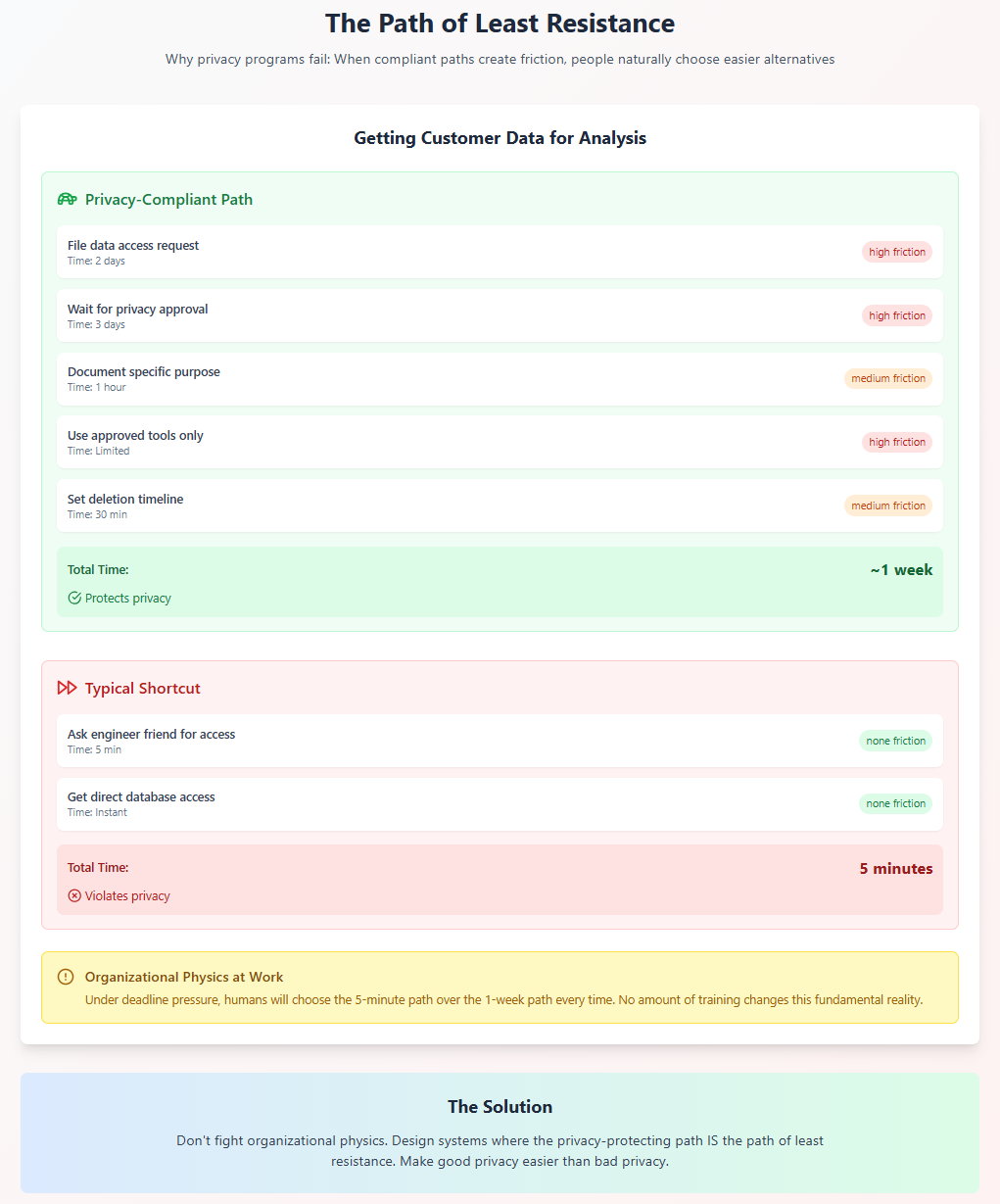

The Path of Least Resistance: Understanding Organizational Physics

Organizations, like physical systems, follow the path of least resistance. Water doesn't flow uphill, and employees don't consistently choose difficult privacy-protecting options over easy privacy-violating ones—no matter how much training they receive.

Donald Norman explores this in The Design of Everyday Things: "When people fail to follow procedures, it is often because the procedures are wrong, not the people." This principle, applied to privacy, reveals why most programs fail. They create procedures that fight against natural workflows rather than aligning with them.

Consider a typical scenario: A product manager needs customer data for analysis. The privacy-compliant path requires:

- Filing a data access request

- Waiting for privacy team approval

- Documenting the specific purpose

- Agreeing to deletion timelines

- Using only approved tools

The non-compliant path requires:

- Asking an engineer friend for database access

Which path will hurried humans under deadline pressure choose? The question answers itself.

The Incentive Misalignment Problem

Charlie Munger famously said, "Show me the incentive and I will show you the outcome." Most privacy programs create perverse incentives that actively discourage privacy protection:

- Speed vs. Privacy: Teams are rewarded for shipping features quickly, not for minimizing data collection

- Growth vs. Restraint: Metrics emphasize user acquisition and engagement, not privacy preservation

- Innovation vs. Caution: "Move fast and break things" cultures penalize the careful consideration privacy requires

- Individual vs. Collective: Performance reviews measure individual achievement, not shared outcomes like privacy protection

When your organizational physics rewards privacy violations and penalizes privacy protection, no amount of training or policy can overcome the fundamental forces at work.

The Complexity Cascade

Modern organizations create what Charles Perrow calls "normal accidents" in his book of the same name—failures that emerge from complex system interactions rather than individual mistakes. Privacy violations often follow this pattern:

- System Complexity: Multiple teams touch customer data through different systems

- Tight Coupling: Changes in one system cascade through others automatically

- Cognitive Overload: No one person understands the full data flow

- Emergent Behaviors: Combinations create privacy risks no one anticipated

As James Reason explains in Human Error, "Complex systems contain multiple layers of defenses... accidents occur when holes in these layers momentarily line up." Privacy programs that add layers of rules without addressing system design are just adding more Swiss cheese—more layers with holes that will eventually align.

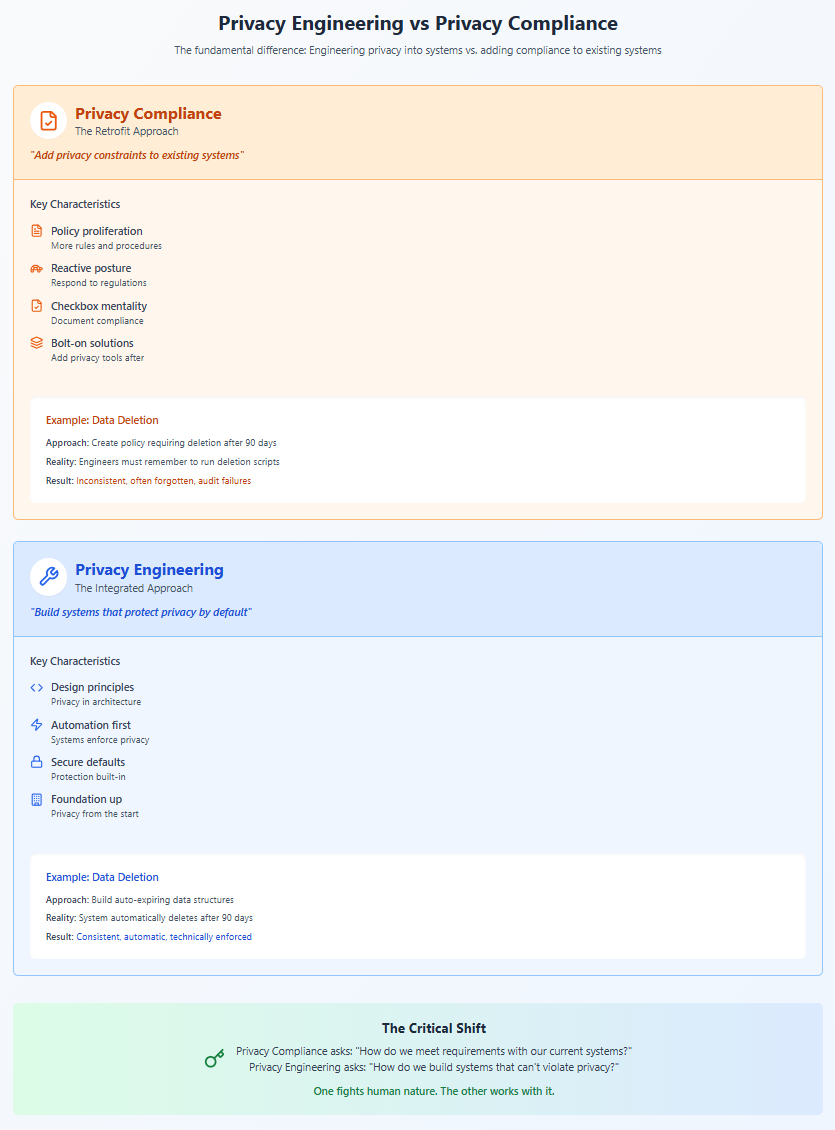

Privacy Engineering vs. Privacy Compliance: Building Protection Into the Foundation

The distinction between privacy engineering and privacy compliance represents a fundamental shift in how we approach privacy protection. It's the difference between building a house with a solid foundation versus trying to waterproof a house built on sand.

Privacy Compliance: The Retrofit Approach

Privacy compliance treats privacy as a constraint to be managed. It asks: "Given our existing systems and processes, how do we meet regulatory requirements?" This leads to:

- Bolt-on Solutions: Privacy tools added after systems are designed

- Policy Proliferation: Ever-growing lists of rules and restrictions

- Checkbox Mentality: Focus on documented compliance over actual protection

- Reactive Posture: Addressing privacy only when regulations require it

As Bruce Schneier has long argued, "Security is a process, not a product." The same applies to privacy. Compliance approaches treat privacy as a product (something to be purchased and installed) rather than a process to be designed into everything.

Privacy Engineering: The Integrated Approach

Privacy engineering treats privacy as a design requirement from the start. It asks: "How do we build systems that protect privacy by default?" This leads to fundamentally different outcomes:

Design Principles Over Rules: Instead of saying "don't collect unnecessary data," privacy engineering builds systems that can't collect unnecessary data. The constraint is built into the architecture, not layered on through policy.

Automation Over Training: Rather than training people to make privacy-protective choices, privacy engineering automates those choices. As Cathy O'Neil, author of Weapons of Math Destruction, has put it, "Algorithms are opinions embedded in code." Privacy engineering embeds privacy-protective opinions into the code itself.

Defaults Over Decisions: Privacy engineering builds on what behavioral economists call the default effect, the tendency of people to stick with whatever option is preselected. By making privacy-protective choices the default, it leverages human nature rather than fighting it.

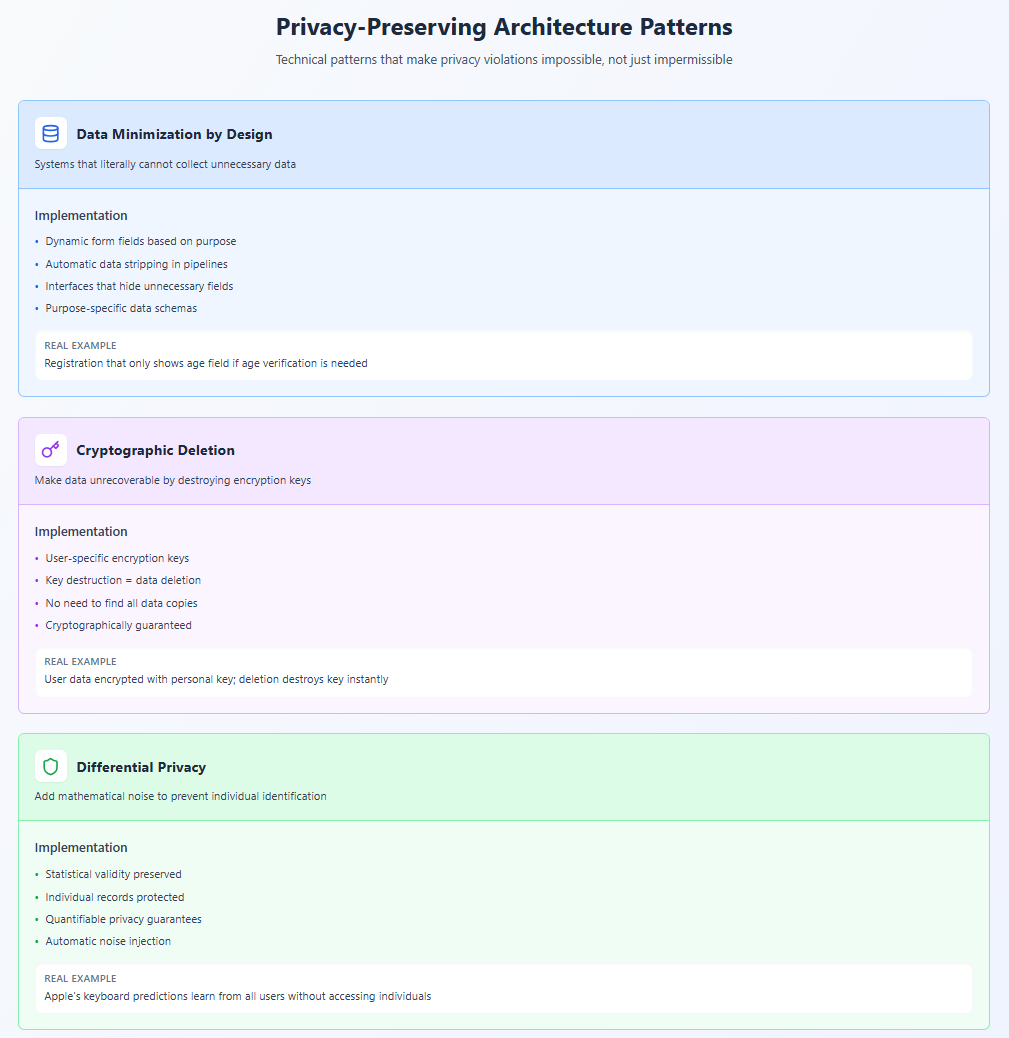

The Architecture Patterns That Work

Successful privacy engineering follows recognizable patterns, which together form what architect Christopher Alexander called a "pattern language." Here are the core patterns:

1. Data Minimization by Design

- Systems that literally cannot collect more than necessary

- Interfaces that don't expose fields for unnecessary data

- Pipelines that automatically strip excess information

Example: A registration system that only shows fields for required information, dynamically adjusting based on user choices, rather than showing all fields and marking some "optional."
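A minimal sketch of this pattern, assuming a hypothetical per-flow allowlist (`ALLOWED_FIELDS`) and helper (`minimize`) that are not part of any particular framework: anything outside the allowlist is dropped before it can reach storage.

```python
# Hypothetical allowlist: the only fields each signup flow may store.
ALLOWED_FIELDS = {
    "newsletter": {"email"},
    "paid_account": {"email", "billing_country"},
}


def minimize(flow: str, submitted: dict) -> dict:
    """Keep only the fields the given flow is allowed to collect."""
    allowed = ALLOWED_FIELDS[flow]  # unknown flows raise KeyError (fail closed)
    return {k: v for k, v in submitted.items() if k in allowed}


raw = {"email": "a@example.com", "birthdate": "1990-01-01", "phone": "555-0100"}
stored = minimize("newsletter", raw)  # birthdate and phone never reach storage
```

The design choice worth noting is that the allowlist fails closed: a new flow collects nothing until someone explicitly decides what it needs.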

2. Purpose Binding Architecture

- Technical controls that enforce purpose limitation

- Data access tied to specific, time-bound purposes

- Automatic expiration when purposes are fulfilled

Example: Analytics databases that automatically partition data by purpose and delete each partition when its analysis period ends, so the data cannot outlive the purpose it was collected for.
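One way to picture purpose binding is as an access grant that names a single purpose and carries its own expiry. The sketch below is illustrative only; `PurposeGrant` and `can_read` are assumed names, and a real system would enforce this inside the data platform rather than in application code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class PurposeGrant:
    """A hypothetical access grant tied to one purpose and an expiry time."""
    dataset: str
    purpose: str
    expires_at: datetime


def can_read(grant: PurposeGrant, dataset: str, purpose: str, now: datetime) -> bool:
    """Allow access only for the granted dataset and purpose, before expiry."""
    return (
        grant.dataset == dataset
        and grant.purpose == purpose
        and now < grant.expires_at
    )


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
grant = PurposeGrant("orders", "churn_analysis", start + timedelta(days=30))
```

With this shape, reusing the same data for a different purpose requires a new grant, which is exactly the decision point the organization wants to make visible.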

3. Privacy-Preserving Defaults

- Opt-in rather than opt-out for any data sharing

- Most restrictive settings as defaults

- Progressive disclosure of data features

Example: Communication systems where all conversations are private by default, requiring explicit actions to share more broadly, reversing the typical social media pattern.
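In code, privacy-preserving defaults can be as simple as a settings object whose every default is the most restrictive value. The class and field names below are assumptions for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class SharingSettings:
    """Hypothetical per-user settings; every default is the most restrictive."""
    profile_public: bool = False
    share_with_partners: bool = False
    personalized_ads: bool = False

    def opt_in(self, setting: str) -> None:
        """Widening sharing requires an explicit, named action."""
        if setting not in {f.name for f in fields(self)}:
            raise ValueError(f"unknown setting: {setting}")
        setattr(self, setting, True)


settings = SharingSettings()        # a new user shares nothing
settings.opt_in("profile_public")   # sharing only changes on explicit opt-in
```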

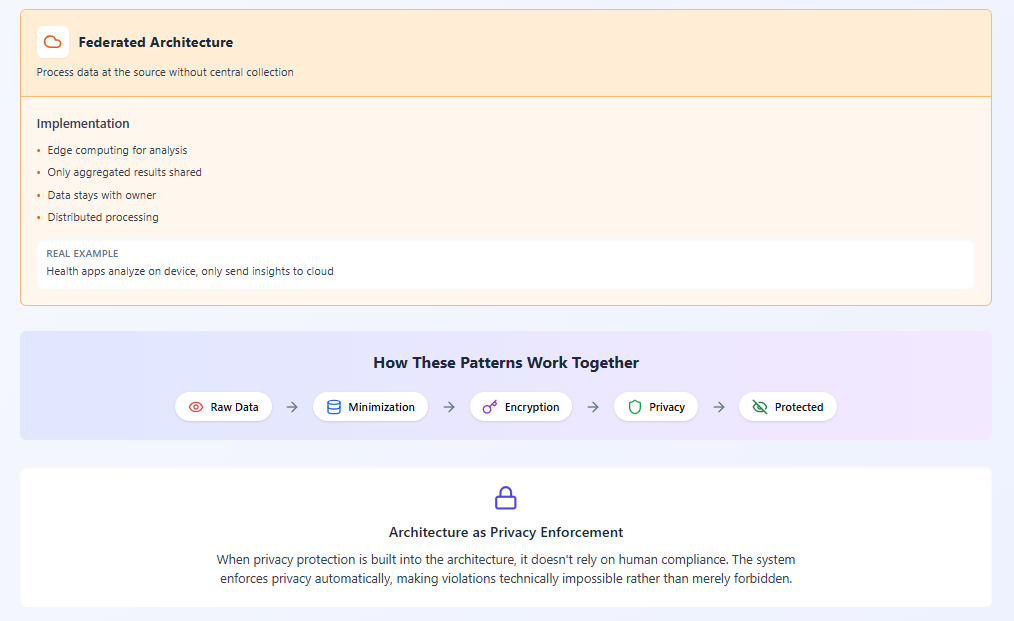

4. Federated Rather Than Centralized

- Data stays close to its source when possible

- Processing happens at the edge

- Aggregation only when necessary

Example: Health monitoring where analysis happens on user devices, sending only aggregated insights to central servers rather than raw biometric data.
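A rough sketch of the edge-processing idea, assuming a hypothetical `summarize_on_device` function that runs on the user's device: raw readings are reduced to a coarse summary, and only that summary is uploaded. Summaries can still be sensitive, so this reduces exposure rather than eliminating it.

```python
from statistics import mean


def summarize_on_device(heart_rates: list[int]) -> dict:
    """Runs on the user's device: reduce raw readings to a coarse summary."""
    return {
        "count": len(heart_rates),
        "mean_bpm": round(mean(heart_rates)),
        "max_bpm": max(heart_rates),
    }


# Only this payload would be sent to the server; the raw list stays local.
payload = summarize_on_device([60, 70, 80])
```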

Designing Privacy Into Workflows: The Integration Imperative

The most elegant privacy solution is one users don't notice because it's seamlessly integrated into their workflow. This requires understanding how work actually happens, not how policies say it should happen.

The Ethnographic Approach

As anthropologist Clifford Geertz advocated, we need "thick description" of actual work practices to design effective privacy protections. This means:

Shadow and Observe: Watch how people actually handle data, not how they say they handle it. The gaps reveal where privacy failures will occur.

Map Information Flows: Document not just official data flows but informal ones—the Excel files emailed between teams, the screenshots shared in Slack, the "temporary" databases that become permanent.

Identify Friction Points: Find where current privacy requirements create the most friction. These are where workarounds will emerge.

Understand Motivations: Learn why people take shortcuts. Usually, it's not malice but legitimate needs unmet by official channels.

Workflow Integration Patterns

Based on studying successful privacy programs, several integration patterns emerge:

1. Just-in-Time Privacy

Instead of front-loading all privacy decisions, integrate them into the workflow when they're relevant:

- Consent requests appear when data sharing is actually needed

- Purpose documentation happens during data access, not before

- Retention decisions are made when creating data, not retroactively

2. Privacy as a Service

Rather than making every team implement privacy controls, provide privacy-preserving services they can use:

- Anonymization APIs that teams can call

- Secure data sharing platforms with built-in controls

- Privacy-preserving analytics tools as defaults
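As a sketch of what such a shared service might expose, the helper below replaces identifier fields with keyed tokens using HMAC-SHA256 from the Python standard library. Strictly speaking this is pseudonymization rather than anonymization, since anyone holding the key can re-link tokens; the function names and the idea of a centrally held key are assumptions.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed, stable token (HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, id_fields: set, key: bytes) -> dict:
    """Return a copy of the record with identifier fields tokenized."""
    return {
        k: pseudonymize(str(v), key) if k in id_fields else v
        for k, v in record.items()
    }


service_key = b"held-by-the-privacy-team"  # placeholder; a real key comes from a secrets manager
clean = scrub_record({"email": "a@example.com", "plan": "pro"}, {"email"}, service_key)
```

Because the tokens are stable, analysts can still join and count by user without ever seeing the underlying email address.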

3. Ambient Privacy Awareness

Make privacy status visible without being intrusive:

- Visual indicators of data sensitivity in interfaces

- Automatic warnings when crossing privacy boundaries

- Privacy impact previews before actions

The GitLab Example: Privacy in Developer Workflows

GitLab provides an instructive example of workflow integration. Rather than creating separate privacy review processes, they embedded privacy into existing developer workflows:

- Merge Request Templates: Include privacy considerations in code review checklists

- CI/CD Integration: Automated privacy checks run alongside security scans

- Issue Templates: Privacy impact assessment questions built into feature planning

As GitLab's CEO Sid Sijbrandij explained, "The best time to address privacy is when developers are already thinking about changes, not as a separate process later."
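To make the CI/CD idea concrete, here is a minimal sketch of an automated check that could run alongside security scans. It is not GitLab's implementation: the pattern list and the function name `flag_added_lines` are assumptions, and a name-based heuristic like this only prompts a human review rather than proving anything about the data.

```python
import re

# Hypothetical field names a team might want reviewed before merging.
SENSITIVE_NAME = re.compile(
    r"(?<![A-Za-z])(ssn|birth_?date|passport|phone_?number)(?![A-Za-z])",
    re.IGNORECASE,
)


def flag_added_lines(diff_text: str) -> list[str]:
    """Return added diff lines that mention a sensitive-looking field name."""
    flagged = []
    for line in diff_text.splitlines():
        # Added lines start with "+"; "+++" is the file header, not content.
        is_added = line.startswith("+") and not line.startswith("+++")
        if is_added and SENSITIVE_NAME.search(line):
            flagged.append(line[1:].strip())
    return flagged


example_diff = (
    "+++ b/models.py\n"
    "+    birth_date = Column(Date)\n"
    "-    phone_number = Column(String)\n"
    "+    nickname = Column(String)"
)
findings = flag_added_lines(example_diff)
```

A pipeline job could fail, or simply add a privacy reviewer, whenever `findings` is non-empty.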

Creating Incentive Structures That Reward Privacy Protection

Changing organizational physics requires aligning incentives with privacy protection. This goes beyond compliance bonuses to fundamental restructuring of how we measure and reward success.

The Measurement Challenge

"What gets measured gets managed" applies perversely to privacy. Traditional metrics often incentivize privacy violations:

- User engagement (encourages excessive data collection)

- Feature velocity (discourages privacy consideration)

- Data completeness (opposes minimization)

- Personalization effectiveness (requires invasive profiling)

As Jerry Muller warns in The Tyranny of Metrics, "Metric fixation leads to gaming the metrics." Privacy programs need metrics that resist gaming while encouraging protection.

Privacy-Positive Metrics

Successful programs measure outcomes that naturally align with privacy protection:

User Trust Metrics

- Percentage of users who understand data practices (tested, not self-reported)

- Rate of voluntary data sharing vs. required

- User-reported comfort with data handling

- Recommendation likelihood based on privacy practices

Data Efficiency Metrics

- Business value per unit of data collected

- Feature success with minimal data

- Deletion completion rates

- Purpose fulfillment ratios

Protection Effectiveness Metrics

- Time from vulnerability discovery to fix

- Privacy incidents prevented (near-misses)

- Automated vs. manual privacy controls

- Default adoption of privacy features

Incentive Design Patterns

1. Privacy Budget Allocation

Much as teams receive cloud spending budgets, give them "privacy budgets" for data collection:

- Each data point has a privacy cost

- Teams must justify collection within budgets

- Unused budget becomes a performance metric
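A privacy budget can be modeled as a simple ledger. In the sketch below the per-field costs and the limit are invented numbers; in practice they would come out of a risk assessment, and the class name `PrivacyBudget` is an assumption.

```python
class PrivacyBudget:
    """Hypothetical per-team budget; costs per field are assumed values."""

    def __init__(self, limit: int, field_costs: dict):
        self.limit = limit
        self.field_costs = field_costs
        self.spent = 0

    def request(self, field: str) -> bool:
        """Approve collection of a field only if the budget covers its cost."""
        cost = self.field_costs[field]
        if self.spent + cost > self.limit:
            return False
        self.spent += cost
        return True

    @property
    def unused(self) -> int:
        """Remaining budget, which can be reported as a team metric."""
        return self.limit - self.spent


budget = PrivacyBudget(limit=10, field_costs={"email": 2, "location": 7, "contacts": 5})
approved = [budget.request(f) for f in ("email", "location", "contacts")]
```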

2. Privacy as a Feature

Treat privacy protections as product features in roadmaps:

- Privacy improvements count toward velocity metrics

- Privacy features get equal weight in OKRs

- Privacy becomes part of innovation, not constraint

3. Collective Responsibility

Make privacy protection a shared metric:

- Team bonuses tied to organizational privacy health

- Cross-functional privacy goals

- Incident impact affects multiple teams

The Spotify Model: Squads and Privacy

Spotify's autonomous squad model embeds privacy responsibility directly into teams:

- Each squad owns privacy for their features

- Privacy engineers embedded in squads, not centralized

- Privacy metrics included in squad health checks

- Autonomy balanced with shared privacy principles

This distributed model makes privacy everyone's job, not just the privacy team's responsibility.

Technical Architecture for Privacy: Building Systems That Can't Violate Privacy

The most effective privacy protection is making violations technically impossible. This requires architectural choices that embed privacy into the fundamental structure of systems.

The Principle of Least Privilege Architecture

Borrowing from security architecture, privacy systems should grant minimal necessary access:

Data Access Layers

Application Layer → Privacy Mediation Layer → Data Storage Layer

The mediation layer enforces:

- Purpose-based access controls

- Automatic data minimization

- Audit trail generation

- Consent verification
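The four responsibilities of the mediation layer can be sketched in a few dozen lines. This is an illustration under stated assumptions: storage is a plain list of dictionaries, `PURPOSE_COLUMNS` is a hypothetical policy table, and consent is a simple per-user set of purposes.

```python
from datetime import datetime, timezone

# Hypothetical policy: which columns each purpose may read.
PURPOSE_COLUMNS = {
    "billing": {"user_id", "plan", "billing_country"},
    "support": {"user_id", "email"},
}


class PrivacyMediator:
    """Sits between application code and storage (here, a list of dicts)."""

    def __init__(self, rows: list, consents: dict):
        self._rows = rows
        self._consents = consents  # user_id -> set of consented purposes
        self.audit_log = []

    def query(self, actor: str, purpose: str) -> list:
        columns = PURPOSE_COLUMNS.get(purpose)
        if columns is None:  # purpose-based access control
            raise PermissionError(f"no policy for purpose: {purpose}")
        result = [
            {k: v for k, v in row.items() if k in columns}  # data minimization
            for row in self._rows
            if purpose in self._consents.get(row["user_id"], set())  # consent check
        ]
        # Audit trail: who asked, for what purpose, and how many rows came back.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), actor, purpose, len(result))
        )
        return result


rows = [
    {"user_id": 1, "email": "a@example.com", "plan": "pro", "billing_country": "DE"},
    {"user_id": 2, "email": "b@example.com", "plan": "free", "billing_country": "FR"},
]
mediator = PrivacyMediator(rows, {1: {"billing", "support"}, 2: {"support"}})
billing_view = mediator.query("alice", "billing")
```

Application code never touches `rows` directly, so the easy path and the compliant path are the same path.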

Privacy-Preserving Technical Patterns

1. Differential Privacy by Default

Build systems that add calibrated statistical noise to results, limiting what can be learned about any individual:

- Aggregate queries return statistically valid but individually anonymous results

- The influence of any single record on the output is mathematically bounded

- Privacy guarantees are quantifiable and auditable

Example: Apple's use of differential privacy for keyboard predictions—learning from user behavior without accessing individual data.
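For intuition, here is a minimal sketch of the Laplace mechanism applied to a counting query. It is a teaching example, not Apple's method and not production-grade: the `random` module is not a cryptographic source, naive floating-point Laplace sampling has known weaknesses, and the function names are assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: list, predicate, epsilon: float, rng: random.Random) -> float:
    """Counting query with Laplace noise; a count has sensitivity 1."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [23, 35, 41, 52, 67, 29, 44]
rng = random.Random(7)  # seeded only to make the sketch repeatable
noisy = private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
```

Smaller values of `epsilon` add more noise and give stronger protection; the cost is accuracy, which is why the privacy guarantee is described as quantifiable.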

2. Homomorphic Processing

Enable computation on encrypted data:

- Analysis happens without decryption

- Data owners maintain control

- Processing and privacy coexist

Example: Healthcare systems analyzing patient data patterns while data remains encrypted and individually inaccessible.
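To show what "analysis without decryption" means mechanically, the sketch below implements a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. The tiny primes are an assumption chosen for readability and offer no security; real systems rely on vetted libraries and much larger keys.

```python
import math
import secrets

# Toy parameters (assumed for illustration only; far too small to be secure).
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p-1, q-1)


def _L(x: int) -> int:
    return (x - 1) // N


# Precomputed decryption constant for generator g = N + 1.
MU = pow(_L(pow(N + 1, LAMBDA, N_SQ)), -1, N)


def encrypt(m: int) -> int:
    """Encrypt an integer m with 0 <= m < N using fresh randomness."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    return (_L(pow(c, LAMBDA, N_SQ)) * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


# An analyst can total two encrypted values without seeing either one.
encrypted_total = add_encrypted(encrypt(120), encrypt(85))
```

Only the key holder can call `decrypt(encrypted_total)`, which is how data owners keep control while still allowing aggregate analysis.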

3. Zero-Knowledge Architectures

Prove facts without revealing underlying data:

- Age verification without birthdate disclosure

- Income validation without salary exposure

- Credential verification without credential sharing

Example: Privacy-preserving identity systems that verify attributes without revealing unnecessary personal information.

The Architecture of Deletion

GDPR's "right to be forgotten" reveals how few systems are designed for deletion. Privacy-preserving architecture makes deletion natural:

Cryptographic Deletion

- Data encrypted with user-specific keys

- Deletion means destroying keys

- Data becomes cryptographically inaccessible
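The mechanics of cryptographic deletion fit in a short sketch. To stay within the standard library, the example below uses a SHAKE-256 keystream XOR as a stand-in cipher; that choice is an assumption made for illustration, and a real system would use an authenticated cipher such as AES-GCM from a vetted library, with keys held in a key management service.

```python
import hashlib
import secrets


class CryptoShredStore:
    """Toy store: one key per user; destroying the key 'deletes' the data."""

    def __init__(self):
        self._keys = {}   # user_id -> key (would live in a KMS or HSM)
        self._blobs = {}  # user_id -> (nonce, ciphertext); may be replicated

    @staticmethod
    def _xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
        # Illustrative keystream only; not a substitute for a real cipher.
        stream = hashlib.shake_256(key + nonce).digest(len(data))
        return bytes(a ^ b for a, b in zip(data, stream))

    def put(self, user_id: str, plaintext: bytes) -> None:
        key = self._keys.setdefault(user_id, secrets.token_bytes(32))
        nonce = secrets.token_bytes(16)
        self._blobs[user_id] = (nonce, self._xor(key, nonce, plaintext))

    def get(self, user_id: str) -> bytes:
        key = self._keys[user_id]  # raises KeyError once the key is shredded
        nonce, ciphertext = self._blobs[user_id]
        return self._xor(key, nonce, ciphertext)

    def shred(self, user_id: str) -> None:
        """Destroy the key; ciphertext copies elsewhere become unreadable."""
        del self._keys[user_id]


store = CryptoShredStore()
store.put("user-1", b"home address")
```

The appeal of this design is that a deletion request only has to reach the key store, not every backup and replica that holds the ciphertext.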

Tombstone Patterns

- Deletion records propagate through systems

- Derived data automatically expires

- Backups honor deletion commitments

Temporal Architecture

- Data structures with built-in expiration

- Automatic cleanup processes

- Time-based access controls
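Temporal architecture can start with a store that refuses to accept data without an expiry. The `ExpiringStore` class below is a hypothetical in-memory sketch; the injectable `clock` parameter is an assumption that makes the behavior easy to test.

```python
import time


class ExpiringStore:
    """Hypothetical key-value store where every entry must carry a TTL."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}  # key -> (expires_at, value)

    def put(self, key, value, ttl_seconds: float) -> None:
        self._items[key] = (self._clock() + ttl_seconds, value)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None or entry[0] <= self._clock():
            self._items.pop(key, None)  # expired entries are dropped on read
            return default
        return entry[1]

    def purge(self) -> int:
        """Cleanup job: remove all expired entries and return how many."""
        now = self._clock()
        expired = [k for k, (exp, _) in self._items.items() if exp <= now]
        for k in expired:
            del self._items[k]
        return len(expired)
```

Because `put` has no default TTL, the retention decision is made at the moment data is created, which matches the just-in-time approach described earlier.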

Real Implementation: Signal's Privacy Architecture

Signal messenger demonstrates privacy-preserving architecture in practice:

- Sealed Sender: Messages are delivered so that Signal's servers do not learn who sent them

- Minimal Metadata: The server knows almost nothing about users

- Private Contact Discovery: Contacts are matched without exposing users' address books to Signal

- Forward Secrecy: Compromised keys don't expose past messages

As Signal's Moxie Marlinspike explained: "We've designed the system so we don't have to be trusted."

Case Studies: Organizations Getting It Right

Examining successful privacy programs reveals common patterns in how they integrate privacy into organizational fabric.

Case Study 1: DuckDuckGo - Privacy as Business Model

DuckDuckGo didn't add privacy to a search engine—they built a search engine around privacy:

Architectural Choices:

- No user tracking infrastructure exists to abuse

- Search queries aren't stored or associated

- Revenue through contextual, not behavioral, advertising

Organizational Alignment:

- Every employee understands privacy as core value

- Product decisions evaluated through privacy lens

- Growth strategies that don't compromise privacy

Results: Sustainable growth proving privacy-respecting businesses can succeed

Case Study 2: Basecamp - Privacy Through Simplicity

Basecamp's approach shows how constraints can enhance privacy:

Design Philosophy:

- "It's not possible to do things with Basecamp that you shouldn't do"

- Features deliberately limited to prevent misuse

- Complexity rejected in favor of privacy

Implementation:

- No tracking pixels in emails

- No behavioral analytics

- No data sharing with third parties

- Clear, simple privacy policies

Outcome: Customer trust as competitive advantage

Case Study 3: Mozilla - Privacy in Open Source

Mozilla demonstrates privacy protection through transparency:

Open Architecture:

- Source code available for inspection

- Privacy claims verifiable by anyone

- Community involvement in privacy decisions

Privacy Innovation:

- Firefox containers isolating tracking

- DNS over HTTPS by default

- Enhanced tracking protection

Cultural Integration:

- Privacy part of Mozilla Manifesto

- Employee onboarding emphasizes privacy

- Public privacy advocacy

Building Your Privacy Program: A Practical Framework

Moving from theory to practice requires a systematic approach to building privacy into your organization's DNA.

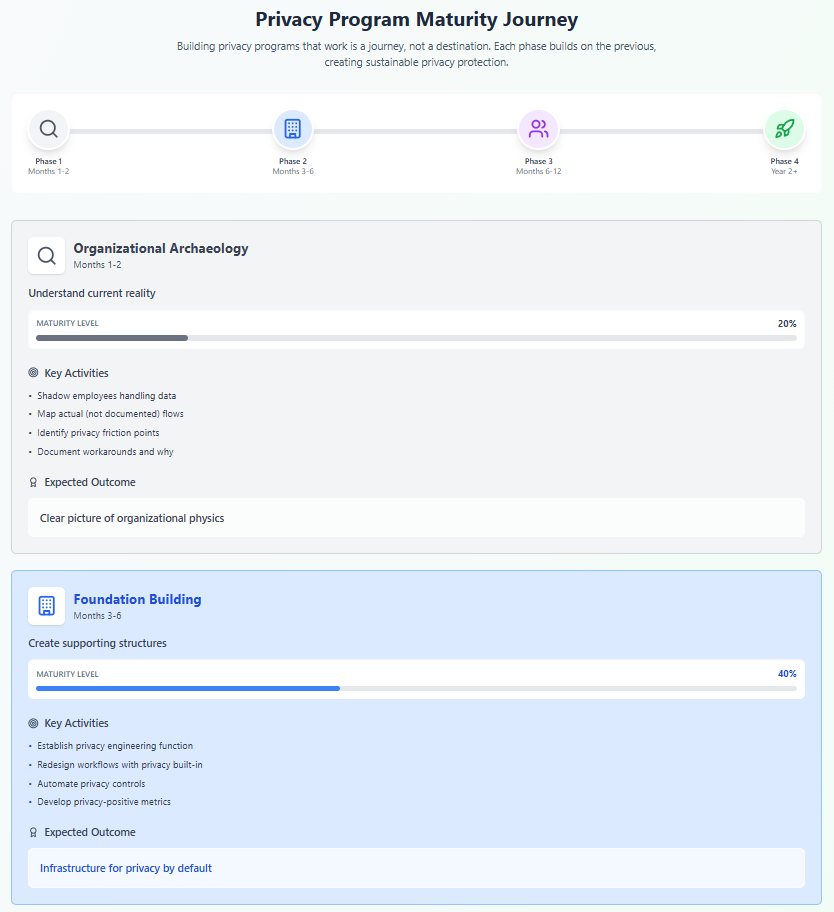

Phase 1: Organizational Archaeology (Months 1-2)

Before building new structures, understand existing ones:

Data Flow Ethnography

- Shadow employees handling data

- Map actual (not documented) data flows

- Identify privacy friction points

- Document workarounds and why they exist

Incentive Analysis

- Catalog what behaviors get rewarded

- Identify metrics driving decisions

- Map promotion criteria

- Understand budget allocations

Technical Architecture Review

- Assess current privacy capabilities

- Identify architectural privacy barriers

- Evaluate technical debt impact

- Map system interdependencies

Phase 2: Foundation Building (Months 3-6)

Create structures that support privacy by default:

Privacy Engineering Function

- Hire/develop privacy engineers (not just lawyers)

- Embed privacy expertise in development teams

- Create privacy architecture review board

- Establish privacy design standards

Workflow Integration

- Redesign key workflows with privacy built-in

- Create privacy-preserving alternatives to current tools

- Automate privacy controls where possible

- Remove friction from privacy-protective paths

Metric Redesign

- Develop privacy-positive success metrics

- Integrate privacy into existing metrics

- Create visibility for privacy successes

- Align incentives with protection

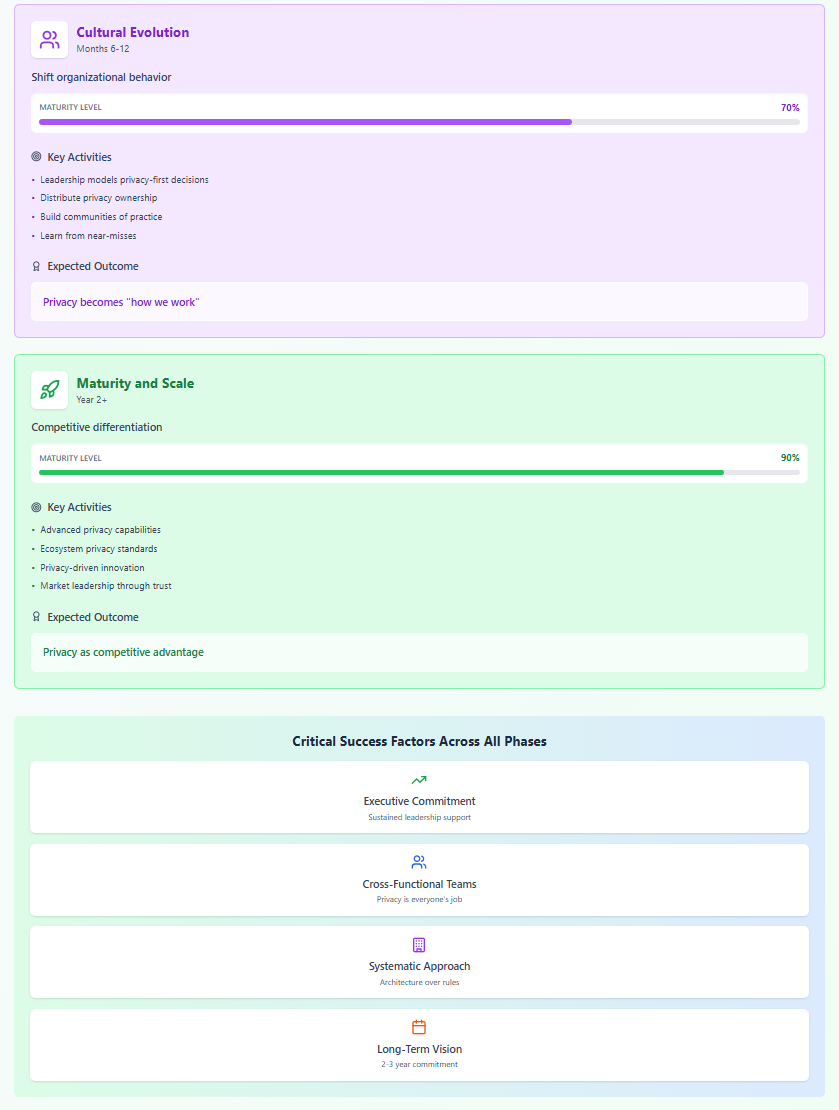

Phase 3: Cultural Evolution (Months 7-12)

Shift organizational physics toward privacy:

Leadership Modeling

- Executives demonstrate privacy-first decisions

- Privacy considerations in strategic planning

- Public commitment to privacy principles

- Resource allocation reflecting priorities

Distributed Ownership

- Privacy responsibilities in every role

- Team-level privacy health metrics

- Cross-functional privacy champions

- Community of practice development

Continuous Improvement

- Regular privacy retrospectives

- Learning from near-misses

- External privacy advisory input

- Evolution based on outcomes

Phase 4: Maturity and Scale (Year 2+)

Sustain and expand privacy protection:

Advanced Capabilities

- Privacy-preserving analytics

- Automated privacy impact assessment

- Real-time privacy monitoring

- Predictive privacy risk modeling

Ecosystem Extension

- Privacy requirements for partners

- Industry collaboration on standards

- Open source privacy tools

- Knowledge sharing with community

Innovation Integration

- Privacy as competitive differentiator

- New features designed around privacy

- Business model evolution

- Market leadership through trust

The Architecture of Trust: Why This Matters

Building privacy programs that work isn't just about compliance or risk management—it's about creating the conditions for sustainable digital relationships. As Kevin Kelly writes in The Inevitable, "Trust is the most important currency in the digital age."

The Compound Effect of Good Architecture

Well-architected privacy programs create compound benefits:

- Trust Accumulation: Each privacy-respecting interaction builds trust reserves

- Innovation Freedom: Strong privacy foundation enables bolder experiments

- Competitive Moat: Trust, once earned, is hard for competitors to replicate

- Regulatory Resilience: Principles-based approach adapts to new regulations

- Cultural Strength: Privacy-first culture attracts privacy-conscious talent

The Cost of Architectural Failure

Conversely, poorly architected programs compound problems:

- Trust Bankruptcy: A single violation can destroy years of relationship building

- Innovation Paralysis: Fear of privacy violations stifles experimentation

- Competitive Vulnerability: Privacy scandals create market opportunities for rivals

- Regulatory Whack-a-Mole: Reactive compliance is always behind requirements

- Cultural Corruption: Privacy violations normalize, creating ethical drift

The Deeper Truth About Privacy Programs

As we've explored, successful privacy programs aren't built through policies, training, or compliance checklists. They're built through organizational architecture that makes privacy protection the natural outcome of doing business.

This requires a fundamental shift in thinking. Instead of asking "How do we get people to follow privacy rules?" we must ask "How do we design systems where privacy protection is easier than privacy violation?" Instead of adding privacy controls to existing systems, we must build new systems with privacy at their core.

The challenge isn't technical—we have the tools and patterns needed for privacy-preserving systems. The challenge is organizational—changing the structures, incentives, and cultures that shape behavior. This is harder than implementing technology but more important for sustainable privacy protection.

As architect Buckminster Fuller observed, "You never change things by fighting the existing reality. To change something, build a new model that makes the existing model obsolete." The same applies to privacy programs. We can't bolt privacy onto systems designed for surveillance. We must build new systems designed for protection.

The organizations succeeding at privacy aren't those with the most comprehensive policies or the largest privacy teams. They're those that have integrated privacy so deeply into their organizational architecture that protection happens naturally, almost invisibly, as a byproduct of doing business.

This is the future of privacy programs—not as compliance functions managing constraints, but as architectural practices building systems that respect human autonomy by design. When we get this right, privacy protection becomes not a burden to bear but a capability that enables innovation, builds trust, and creates sustainable competitive advantage.

The choice is ours. We can continue building privacy programs that fight against organizational physics, creating friction and failure. Or we can redesign our organizations to make privacy protection the path of least resistance. The technical patterns exist. The successful examples demonstrate viability. What's needed now is the organizational will to build systems that can't violate privacy because they're not designed to.

Privacy protection at scale isn't about making thousands of correct decisions. It's about building systems where the correct decision is the easy decision. That's the architecture of privacy that actually works.

This is the third in our series exploring data privacy from philosophical and practical perspectives. Next, we'll examine how to measure privacy program effectiveness—moving beyond compliance metrics to understand whether we're actually protecting human autonomy in digital spaces.